Tuesday, 19th August 2025

r/ChatGPTPro: What is the most profitable thing you have done with ChatGPT? This Reddit thread - with 279 replies - offers a neat targeted insight into the kinds of things people are using ChatGPT for.

Lots of variety here but two themes that stood out for me were ChatGPT for written negotiation - insurance claims, breaking rental leases - and ChatGPT for career and business advice.

PyPI: Preventing Domain Resurrection Attacks (via) Domain resurrection attacks are a nasty vulnerability in systems that use email verification to allow people to recover their accounts. If somebody lets their domain name expire an attacker might snap it up and use it to gain access to their accounts - which can turn into a package supply chain attack if they had an account on something like the Python Package Index.

PyPI now protects against these by treating an email address as not-validated if the associated domain expires.

Since early June 2025, PyPI has unverified over 1,800 email addresses when their associated domains entered expiration phases. This isn't a perfect solution, but it closes off a significant attack vector where the majority of interactions would appear completely legitimate.

This attack is not theoretical: it happened to the ctx package on PyPI back in May 2022.

Here's the pull request from April in which Mike Fiedler landed an integration which hits an API provided by Fastly's Domainr, followed by this PR which polls for domain status on any email domain that hasn't been checked in the past 30 days.

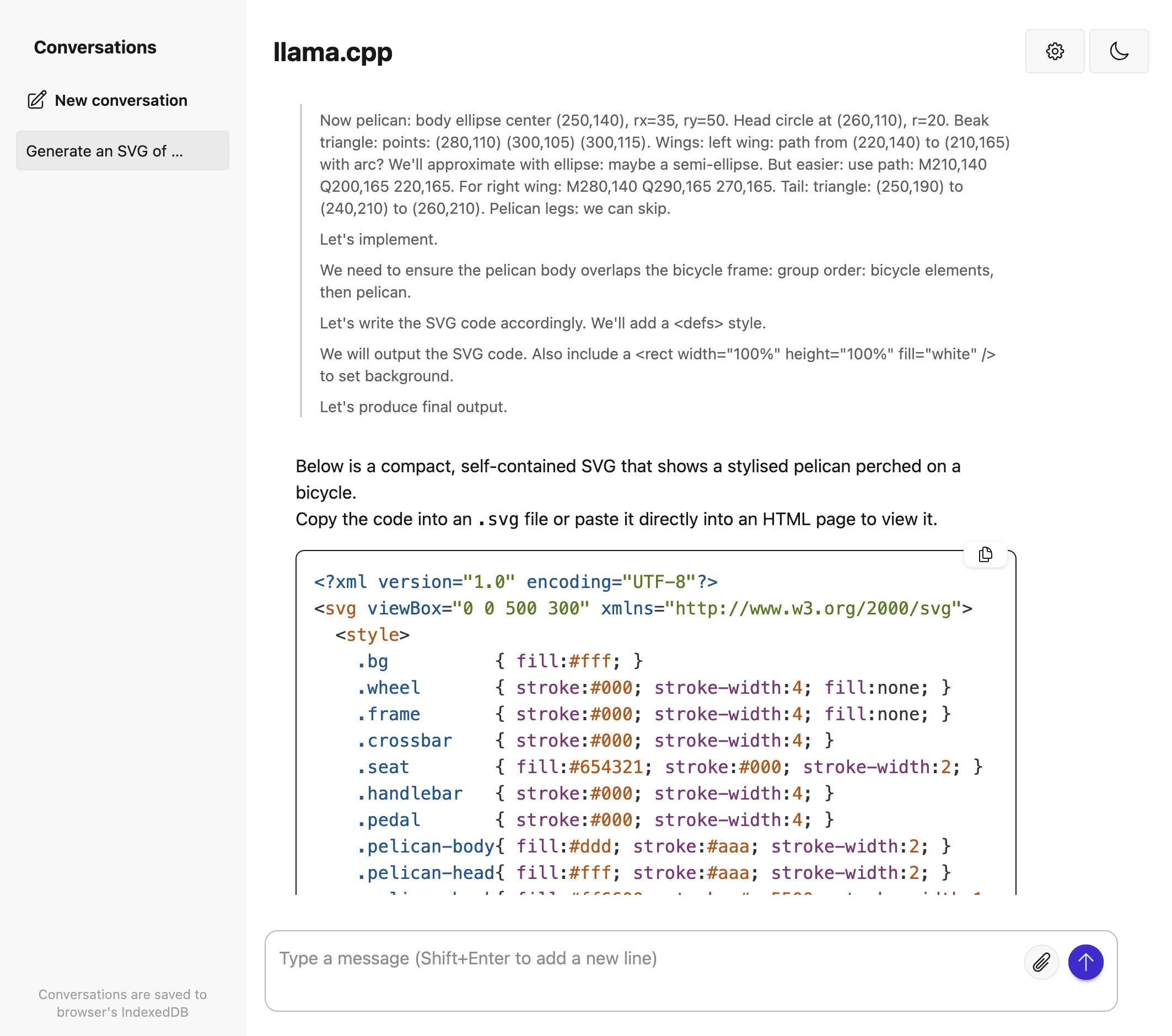

llama.cpp guide: running gpt-oss with llama.cpp

(via)

Really useful official guide to running the OpenAI gpt-oss models using llama-server from llama.cpp - which provides an OpenAI-compatible localhost API and a neat web interface for interacting with the models.

TLDR version for macOS to run the smaller gpt-oss-20b model:

brew install llama.cpp

llama-server -hf ggml-org/gpt-oss-20b-GGUF \

--ctx-size 0 --jinja -ub 2048 -b 2048 -ngl 99 -fa

This downloads a 12GB model file from ggml-org/gpt-oss-20b-GGUF on Hugging Face, stores it in ~/Library/Caches/llama.cpp/ and starts it running on port 8080.

You can then visit this URL to start interacting with the model:

http://localhost:8080/

On my 64GB M2 MacBook Pro it runs at around 82 tokens/second.

The guide also includes notes for running on NVIDIA and AMD hardware.

Today I learned - via a proposal to remove mentions of XSLT from the HTML spec - that congress.gov uses XSLT to serve XML bills as XHTML - here's H. R. 3617 117th CONGRESS 1st Session for example.

View source on that page and it starts like this:

<?xml version="1.0"?> <?xml-stylesheet type="text/xsl" href="billres.xsl"?> <!DOCTYPE bill PUBLIC "-//US Congress//DTDs/bill.dtd//EN" "bill.dtd"> <bill bill-stage="Introduced-in-House" dms-id="H5BD50AB7712141319B352D46135AAC2B" public-private="public" key="H" bill-type="olc"> <metadata xmlns:dc="http://purl.org/dc/elements/1.1/"> <dublinCore> <dc:title>117 HR 3617 IH: Marijuana Opportunity Reinvestment and Expungement Act of 2021</dc:title> <dc:publisher>U.S. House of Representatives</dc:publisher> <dc:date>2021-05-28</dc:date> <dc:format>text/xml</dc:format> <dc:language>EN</dc:language> <dc:rights>Pursuant to Title 17 Section 105 of the United States Code, this file is not subject to copyright protection and is in the public domain.</dc:rights> </dublinCore> </metadata> <form> <distribution-code display="yes">I</distribution-code> <congress display="yes">117th CONGRESS</congress><session display="yes">1st Session</session> <legis-num display="yes">H. R. 3617</legis-num> <current-chamber>IN THE HOUSE OF REPRESENTATIVES</current-chamber>

Digging into those XSLT stylesheets leads to billres-details.xsl - gist copy here - which starts with a huge changelog comment with notes dating all the way back to 2004!

Qwen-Image-Edit: Image Editing with Higher Quality and Efficiency.

As promised in their August 4th release of the Qwen image generation model, Qwen have now followed it up with a separate model, Qwen-Image-Edit, which can take an image and a prompt and return an edited version of that image.

Ivan Fioravanti upgraded his macOS qwen-image-mps tool (previously) to run the new model via a new edit command. Since it's now on PyPI you can run it directly using uvx like this:

uvx qwen-image-mps edit -i pelicans.jpg \

-p 'Give the pelicans rainbow colored plumage' -s 10

Be warned... it downloads a 54GB model file (to ~/.cache/huggingface/hub/models--Qwen--Qwen-Image-Edit) and appears to use all 64GB of my system memory - if you have less than 64GB it likely won't work, and I had to quit almost everything else on my system to give it space to run. A larger machine is almost required to use this.

I fed it this image:

The following prompt:

Give the pelicans rainbow colored plumage

And told it to use just 10 inference steps - the default is 50, but I didn't want to wait that long.

It still took nearly 25 minutes (on a 64GB M2 MacBook Pro) to produce this result:

To get a feel for how much dropping the inference steps affected things I tried the same prompt with the new "Image Edit" mode of Qwen's chat.qwen.ai, which I believe uses the same model. It gave me a result much faster that looked like this:

Update: I left the command running overnight without the -s 10 option - so it would use all 50 steps - and my laptop took 2 hours and 59 minutes to generate this image, which is much more photo-realistic and similar to the one produced by Qwen's hosted model:

Marko Simic reported that:

50 steps took 49min on my MBP M4 Max 128GB