OpenAI's gold medal performance on the International Math Olympiad. This feels notable to me. OpenAI research scientist Alexander Wei:

I’m excited to share that our latest @OpenAI experimental reasoning LLM has achieved a longstanding grand challenge in AI: gold medal-level performance on the world’s most prestigious math competition—the International Math Olympiad (IMO).

We evaluated our models on the 2025 IMO problems under the same rules as human contestants: two 4.5 hour exam sessions, no tools or internet, reading the official problem statements, and writing natural language proofs. [...]

Besides the result itself, I am excited about our approach: We reach this capability level not via narrow, task-specific methodology, but by breaking new ground in general-purpose reinforcement learning and test-time compute scaling.

In our evaluation, the model solved 5 of the 6 problems on the 2025 IMO. For each problem, three former IMO medalists independently graded the model’s submitted proof, with scores finalized after unanimous consensus. The model earned 35/42 points in total, enough for gold!

HUGE congratulations to the team—Sheryl Hsu, Noam Brown, and the many giants whose shoulders we stood on—for turning this crazy dream into reality! I am lucky I get to spend late nights and early mornings working alongside the very best.

Btw, we are releasing GPT-5 soon, and we’re excited for you to try it. But just to be clear: the IMO gold LLM is an experimental research model. We don’t plan to release anything with this level of math capability for several months.

(Normally I would just link to the tweet, but in this case Alexander built a thread... and Twitter threads no longer work for linking as they're only visible to users with an active Twitter account.)

Here's Wikipedia on the International Mathematical Olympiad:

It is widely regarded as the most prestigious mathematical competition in the world. The first IMO was held in Romania in 1959. It has since been held annually, except in 1980. More than 100 countries participate. Each country sends a team of up to six students, plus one team leader, one deputy leader, and observers.

This year's event is in Sunshine Coast, Australia. Here's the web page for the event, which includes a button you can click to access a PDF of the six questions - maybe they don't link to that document directly to discourage it from being indexed.

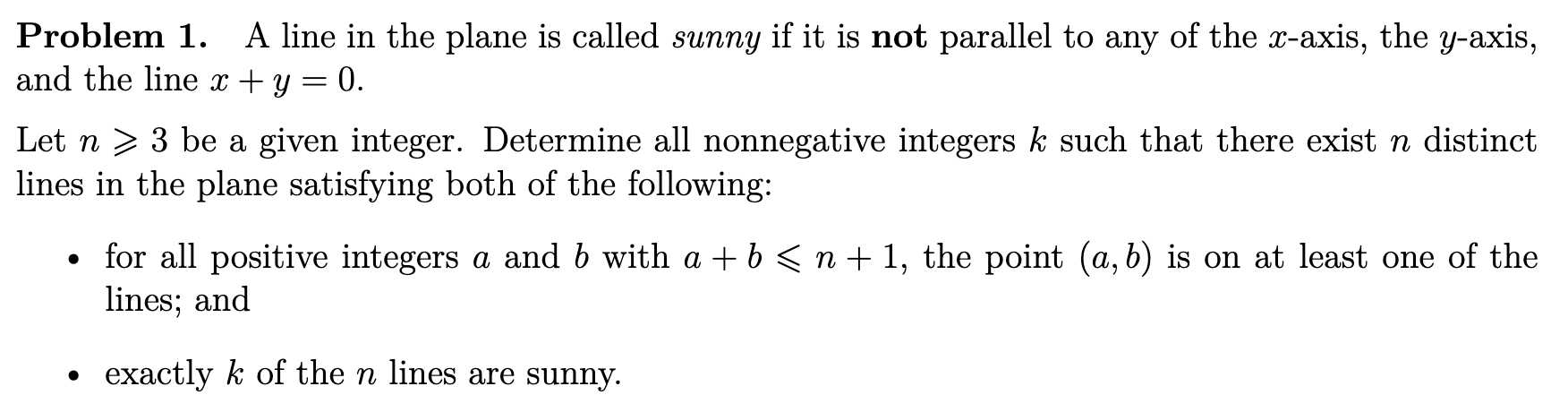

The first of the six questions looks like this:

Alexander shared the proofs produced by the model on GitHub. They're in a slightly strange format - not quite MathML embedded in Markdown - which Alexander excuses since "it is very much an experimental model".

The most notable thing about this is that the unnamed model achieved this score without using any tools. OpenAI's Sebastien Bubeck emphasizes that here:

Just to spell it out as clearly as possible: a next-word prediction machine (because that's really what it is here, no tools no nothing) just produced genuinely creative proofs for hard, novel math problems at a level reached only by an elite handful of pre‑college prodigies.

There's a bunch more useful context in this thread by Noam Brown, including a note that this model wasn't trained specifically for IMO problems:

Typically for these AI results, like in Go/Dota/Poker/Diplomacy, researchers spend years making an AI that masters one narrow domain and does little else. But this isn’t an IMO-specific model. It’s a reasoning LLM that incorporates new experimental general-purpose techniques.

So what’s different? We developed new techniques that make LLMs a lot better at hard-to-verify tasks. IMO problems were the perfect challenge for this: proofs are pages long and take experts hours to grade. Compare that to AIME, where answers are simply an integer from 0 to 999.

Also this model thinks for a long time. o1 thought for seconds. Deep Research for minutes. This one thinks for hours. Importantly, it’s also more efficient with its thinking. And there’s a lot of room to push the test-time compute and efficiency further.

It’s worth reflecting on just how fast AI progress has been, especially in math. In 2024, AI labs were using grade school math (GSM8K) as an eval in their model releases. Since then, we’ve saturated the (high school) MATH benchmark, then AIME, and now are at IMO gold. [...]

When you work at a frontier lab, you usually know where frontier capabilities are months before anyone else. But this result is brand new, using recently developed techniques. It was a surprise even to many researchers at OpenAI. Today, everyone gets to see where the frontier is.