Wednesday, 15th October 2025

A modern approach to preventing CSRF in Go

(via)

Alex Edwards writes about the new http.CrossOriginProtection middleware that was added to the Go standard library in version 1.25 in August and asks:

Have we finally reached the point where CSRF attacks can be prevented without relying on a token-based check (like double-submit cookies)?

It looks like the answer might be yes, which is extremely exciting. I've been tracking CSRF since I first learned about it 20 years ago in May 2005 and a cleaner solution than those janky hidden form fields would be very welcome.

The code for the new Go middleware lives in src/net/http/csrf.go. It works using the Sec-Fetch-Site HTTP header, which Can I Use shows as having 94.18% global availability - the holdouts are mainly IE11, iOS versions prior to iOS 17 (which came out in 2023 but can be installed on any phone released since 2017) and some other ancient browser versions.

If Sec-Fetch-Site is same-origin or none then the page submitting the form was either on the same origin or was navigated to directly by the user - in both cases safe from CSRF. If it's cross-site or same-site (tools.simonwillison.net and til.simonwillison.net are considered same-site but not same-origin) the submission is denied.

If that header isn't available the middleware falls back on comparing two other headers: Origin (a value like https://simonwillison.net) against Host (a value like simonwillison.net). This should cover the tiny fraction of browsers that don't have the new header, though it's not clear to me if there are any weird edge-cases beyond that.

Note that this fallback comparison can't take the scheme into account since Host doesn't list that, so administrators are encouraged to use HSTS to protect against HTTP to HTTPS cross-origin requests.
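The decision logic described above can be sketched as a single function. This is a simplified illustration, not the standard library's code: the `allowed` function and its string parameters are hypothetical. It also bakes in two behaviours that the description above doesn't spell out but that match my understanding of the real middleware: safe methods (GET, HEAD, OPTIONS) are always let through, and a request with neither header is assumed not to come from a browser.

```go
package main

import (
	"fmt"
	"net/url"
)

// allowed is a hypothetical, simplified version of the cross-origin check.
// It takes the request method plus the raw Sec-Fetch-Site, Origin and Host
// header values and reports whether the request should be let through.
func allowed(method, secFetchSite, origin, host string) bool {
	// Assumption: safe methods are never treated as CSRF risks.
	switch method {
	case "GET", "HEAD", "OPTIONS":
		return true
	}

	switch secFetchSite {
	case "same-origin", "none":
		return true // same origin, or a direct user navigation
	case "":
		// Header missing: fall through to the Origin/Host comparison.
	default:
		return false // cross-site or same-site
	}

	if origin == "" {
		// Assumption: neither header present, so probably not a browser.
		return true
	}

	// Fallback: compare the host part of Origin with Host.
	// The scheme is ignored because Host doesn't carry one.
	u, err := url.Parse(origin)
	return err == nil && u.Host == host
}

func main() {
	fmt.Println(allowed("POST", "same-origin", "", "simonwillison.net"))
	fmt.Println(allowed("POST", "same-site", "https://til.simonwillison.net", "tools.simonwillison.net"))
	fmt.Println(allowed("POST", "", "https://simonwillison.net", "simonwillison.net"))
	fmt.Println(allowed("POST", "", "http://simonwillison.net", "simonwillison.net"))
}
```

The last call shows why HSTS matters for the fallback path: an http:// origin still matches the Host header, because only the host part is compared.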

On Lobste.rs I questioned if this would work for localhost, since that normally isn't served using HTTPS. Firefox security engineer Frederik Braun reassured me that *.localhost is treated as a Secure Context, so gets the Sec-Fetch-Site header despite not being served via HTTPS.

Update: Also relevant is Filippo Valsorda's article on CSRF which includes detailed research conducted as part of building the new Go middleware, plus this related Bluesky conversation about that research from six months ago.

Previous system cards have reported results on an expanded version of our earlier agentic misalignment evaluation suite: three families of exotic scenarios meant to elicit the model to commit blackmail, attempt a murder, and frame someone for financial crimes. We choose not to report full results here because, similarly to Claude Sonnet 4.5, Claude Haiku 4.5 showed many clear examples of verbalized evaluation awareness on all three of the scenarios tested in this suite. Since the suite only consisted of many similar variants of three core scenarios, we expect that the model maintained high unverbalized awareness across the board, and we do not trust it to be representative of behavior in the real extreme situations the suite is meant to emulate.

Introducing Claude Haiku 4.5 (via) Anthropic released Claude Haiku 4.5 today, the cheapest member of the Claude 4.5 family that started with Sonnet 4.5 a couple of weeks ago.

It's priced at $1/million input tokens and $5/million output tokens, slightly more expensive than Haiku 3.5 ($0.80/$4) and a lot more expensive than the original Claude 3 Haiku ($0.25/$1.25), both of which remain available at those prices.

It's a third of the price of Sonnet 4 and Sonnet 4.5 (both $3/$15) which is notable because Anthropic's benchmarks put it in a similar space to that older Sonnet 4 model. As they put it:

What was recently at the frontier is now cheaper and faster. Five months ago, Claude Sonnet 4 was a state-of-the-art model. Today, Claude Haiku 4.5 gives you similar levels of coding performance but at one-third the cost and more than twice the speed.

I've been hoping to see Anthropic release a fast, inexpensive model that's price competitive with the cheapest models from OpenAI and Gemini, currently $0.05/$0.40 (GPT-5-Nano) and $0.075/$0.30 (Gemini 2.0 Flash Lite). Haiku 4.5 certainly isn't that. It looks like they're continuing to focus squarely on the "great at code" part of the market.

The new Haiku is the first Haiku model to support reasoning. It sports a 200,000 token context window, 64,000 maximum output (up from just 8,192 for Haiku 3.5) and a "reliable knowledge cutoff" of February 2025, one month later than the January 2025 date for Sonnet 4 and 4.5 and Opus 4 and 4.1.

Something that caught my eye in the accompanying system card was this note about context length:

For Claude Haiku 4.5, we trained the model to be explicitly context-aware, with precise information about how much context-window has been used. This has two effects: the model learns when and how to wrap up its answer when the limit is approaching, and the model learns to continue reasoning more persistently when the limit is further away. We found this intervention—along with others—to be effective at limiting agentic “laziness” (the phenomenon where models stop working on a problem prematurely, give incomplete answers, or cut corners on tasks).

I've added the new price to llm-prices.com, released llm-anthropic 0.20 with the new model and updated my Haiku-from-your-webcam demo (source) to use Haiku 4.5 as well.

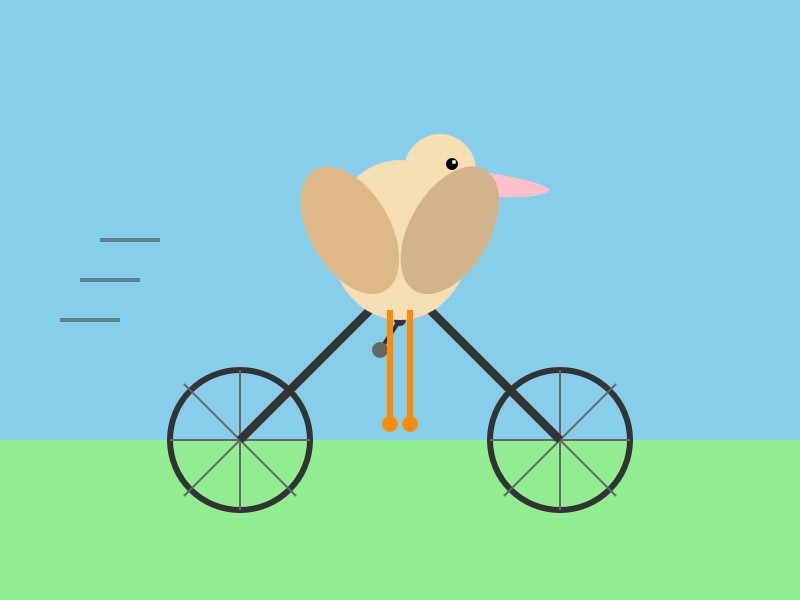

Here's llm -m claude-haiku-4.5 'Generate an SVG of a pelican riding a bicycle' (transcript).

18 input tokens and 1513 output tokens = 0.7583 cents.
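That figure follows directly from the per-million-token prices quoted earlier. Here's the arithmetic as a small sketch. The `costCents` helper is a hypothetical name, and the second line of output is a what-if for the same token counts billed at Sonnet's $3/$15 rates, not a measured result.

```go
package main

import "fmt"

// costCents returns the cost of one request in US cents, given token counts
// and prices expressed in dollars per million tokens.
func costCents(inputTokens, outputTokens int, inputPrice, outputPrice float64) float64 {
	dollars := (float64(inputTokens)*inputPrice + float64(outputTokens)*outputPrice) / 1e6
	return dollars * 100
}

func main() {
	// Haiku 4.5 at $1/$5 per million tokens: prints 0.7583 cents.
	fmt.Printf("Haiku 4.5:  %.4f cents\n", costCents(18, 1513, 1.00, 5.00))

	// Hypothetical comparison: identical token counts at Sonnet's $3/$15.
	fmt.Printf("Sonnet 4.5: %.4f cents\n", costCents(18, 1513, 3.00, 15.00))
}
```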

While Sonnet 4.5 remains the default [in Claude Code], Haiku 4.5 now powers the Explore subagent which can rapidly gather context on your codebase to build apps even faster.

You can select Haiku 4.5 to be your default model in /model. When selected, you’ll automatically use Sonnet 4.5 in Plan mode and Haiku 4.5 for execution for smarter plans and faster results.

— Catherine Wu, Claude Code PM, Anthropic