201 posts tagged “anthropic”

2025

If you have an AGENTS.md file, you can source it in your CLAUDE.md using @AGENTS.md to maintain a single source of truth.

— Claude Docs, with the official answer to standardizing on AGENTS.md

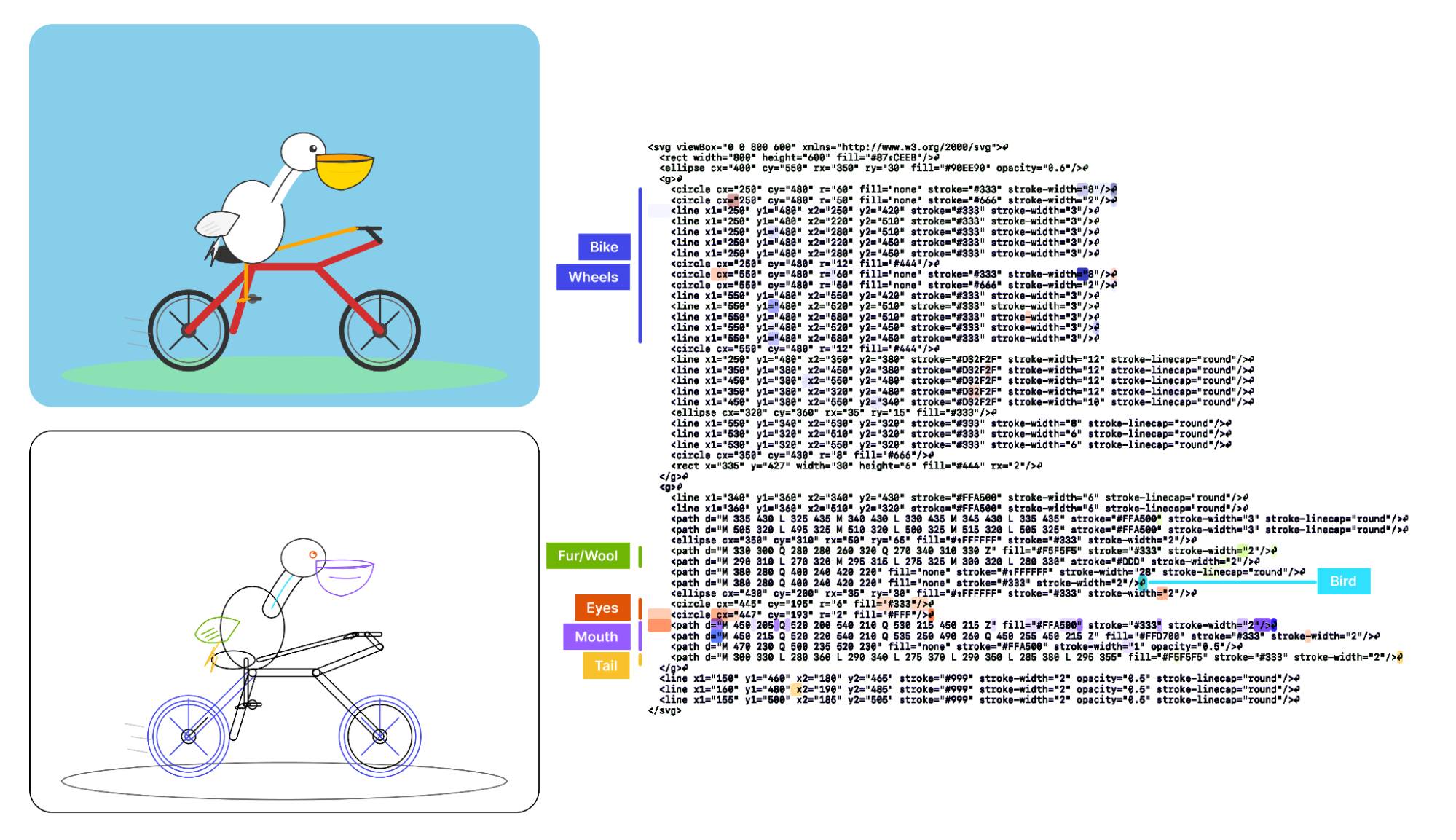

Visual Features Across Modalities: SVG and ASCII Art Reveal Cross-Modal Understanding (via) New model interpretability research from Anthropic, this time focused on SVG and ASCII art generation.

We found that the same feature that activates over the eyes in an ASCII face also activates for eyes across diverse text-based modalities, including SVG code and prose in various languages. This is not limited to eyes – we found a number of cross-modal features that recognize specific concepts: from small components like mouths and ears within ASCII or SVG faces, to full visual depictions like dogs and cats. [...]

These features depend on the surrounding context within the visual depiction. For instance, an SVG circle element activates “eye” features only when positioned within a larger structure that activates “face” features.

And really, I can't not link to this one given the bonus they tagged on at the end!

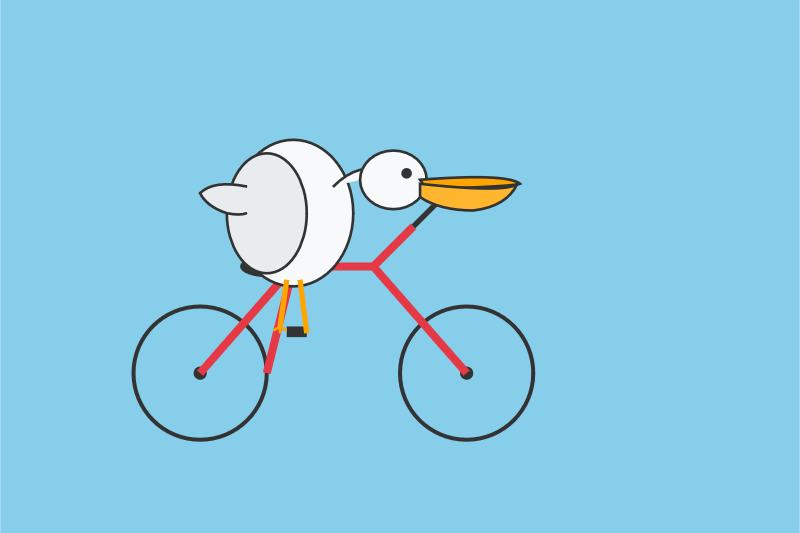

As a bonus, we also inspected features for an SVG of a pelican riding a bicycle, first popularized by Simon Willison as a way to test a model's artistic capabilities. We find features representing concepts including "bike", "wheels", "feet", "tail", "eyes", and "mouth" activating over the corresponding parts of the SVG code.

Now that they can identify model features associated with visual concepts in SVG images, can they use those for steering?

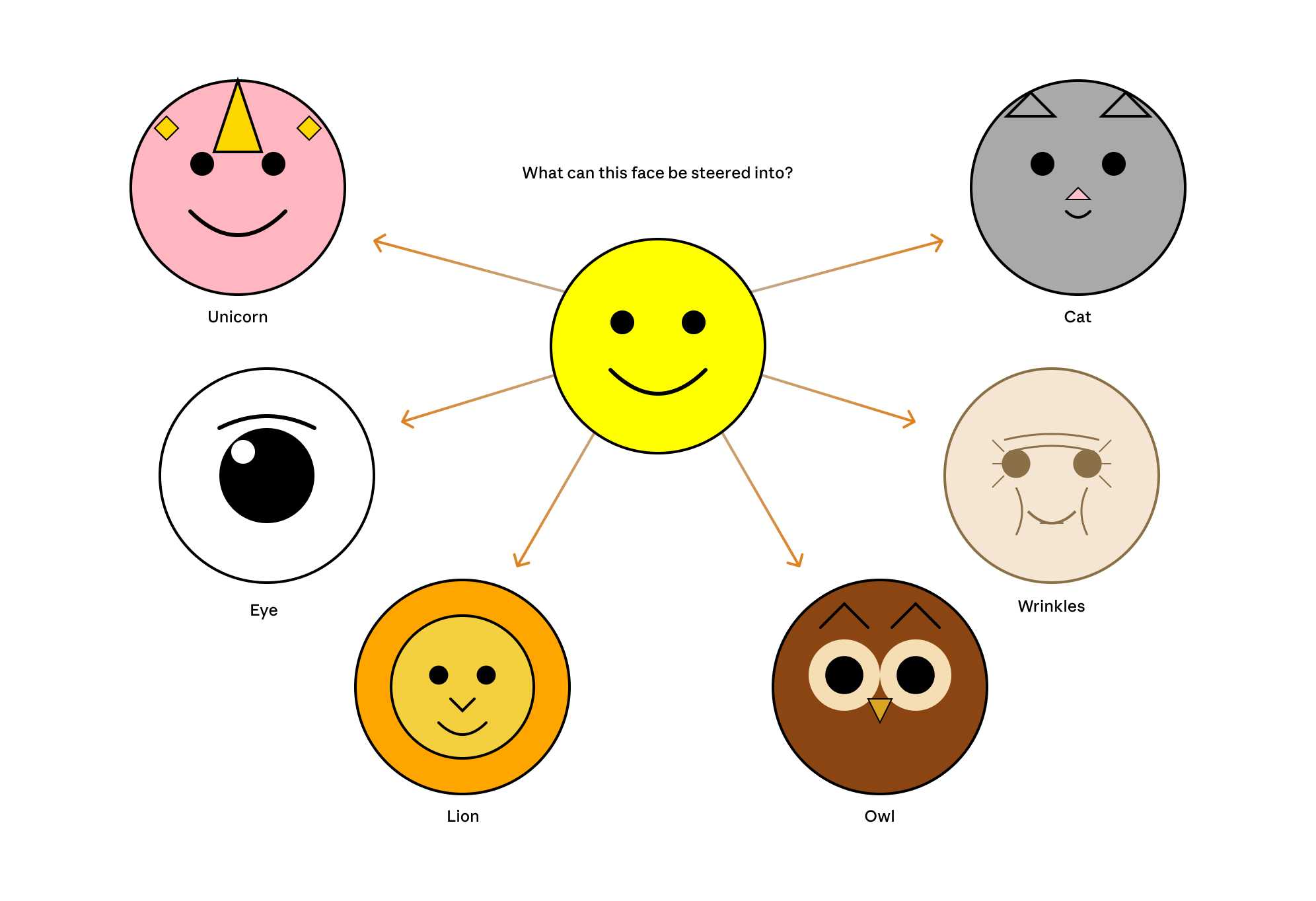

It turns out they can! Starting with a smiley SVG (provided as XML with no indication as to what it was drawing) and then applying a negative score to the "smile" feature produced a frown instead, and worked against ASCII art as well.

They could also boost features like unicorn, cat, owl, or lion and get new SVG smileys clearly attempting to depict those creatures.

I'd love to see how this behaves if you jack up the feature for the Golden Gate Bridge.

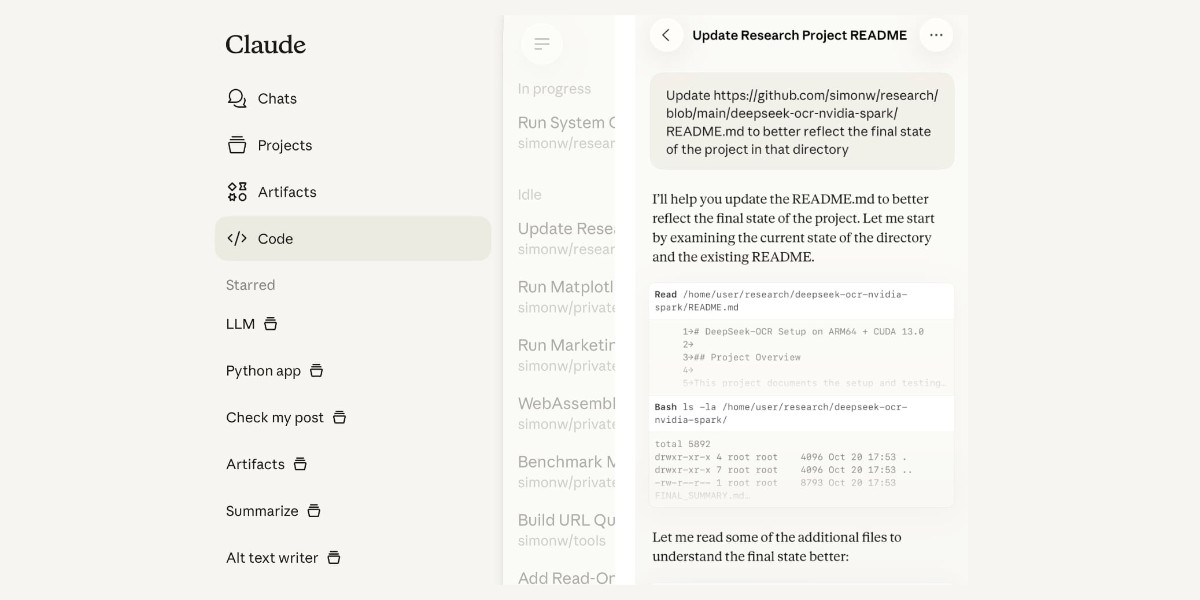

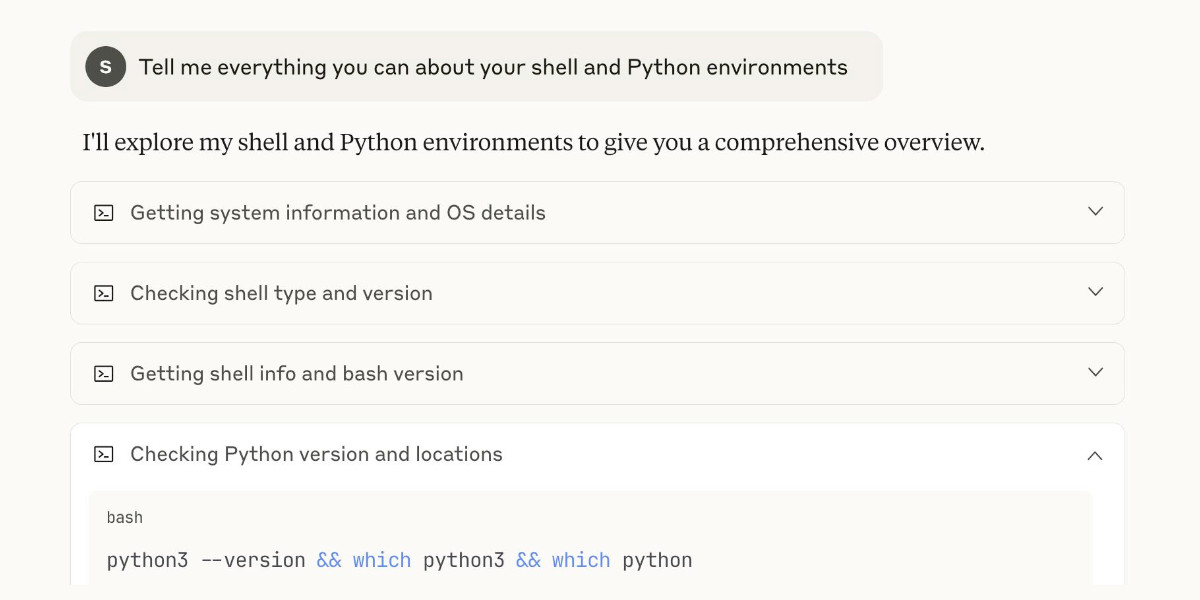

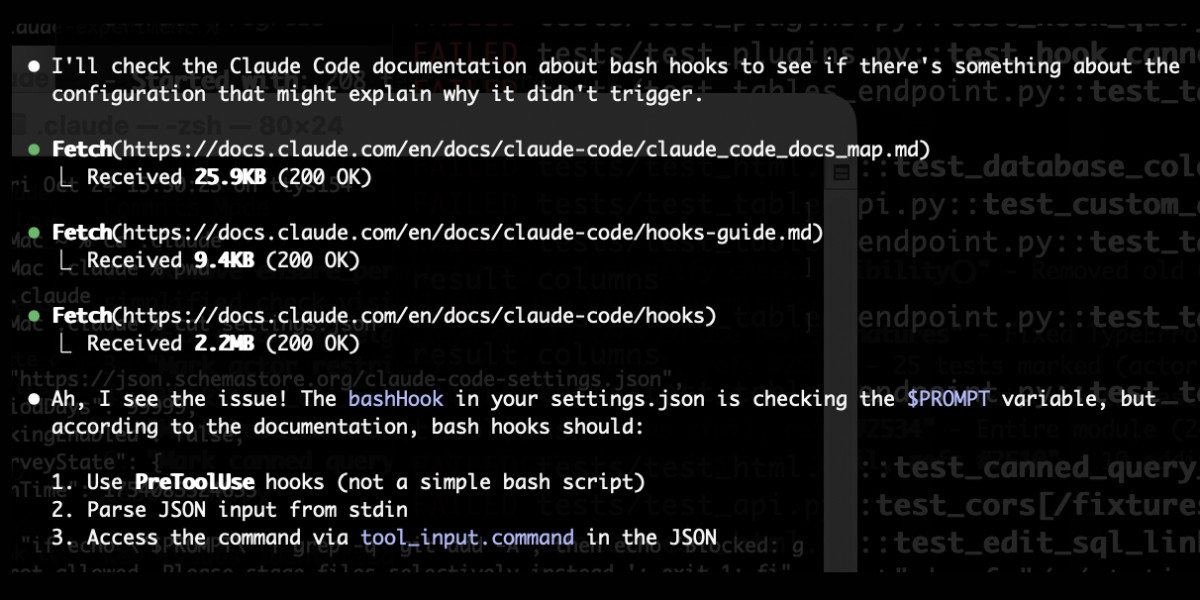

claude_code_docs_map.md. Something I'm enjoying about Claude Code is that any time you ask it questions about itself it runs tool calls like these:

In this case I'd asked it about its "hooks" feature.

The claude_code_docs_map.md file is a neat Markdown index of all of their other documentation - the same pattern advocated by llms.txt. Claude Code can then fetch further documentation to help it answer your question.

I intercepted the current Claude Code system prompt using this trick and sure enough it included a note about this URL:

When the user directly asks about Claude Code (eg. "can Claude Code do...", "does Claude Code have..."), or asks in second person (eg. "are you able...", "can you do..."), or asks how to use a specific Claude Code feature (eg. implement a hook, or write a slash command), use the WebFetch tool to gather information to answer the question from Claude Code docs. The list of available docs is available at https://docs.claude.com/en/docs/claude-code/claude_code_docs_map.md.

I wish other LLM products - including both ChatGPT and Claude.ai themselves - would implement a similar pattern. It's infuriating how bad LLM tools are at answering questions about themselves, though unsurprising given that their model's training data pre-dates the latest version of those tools.

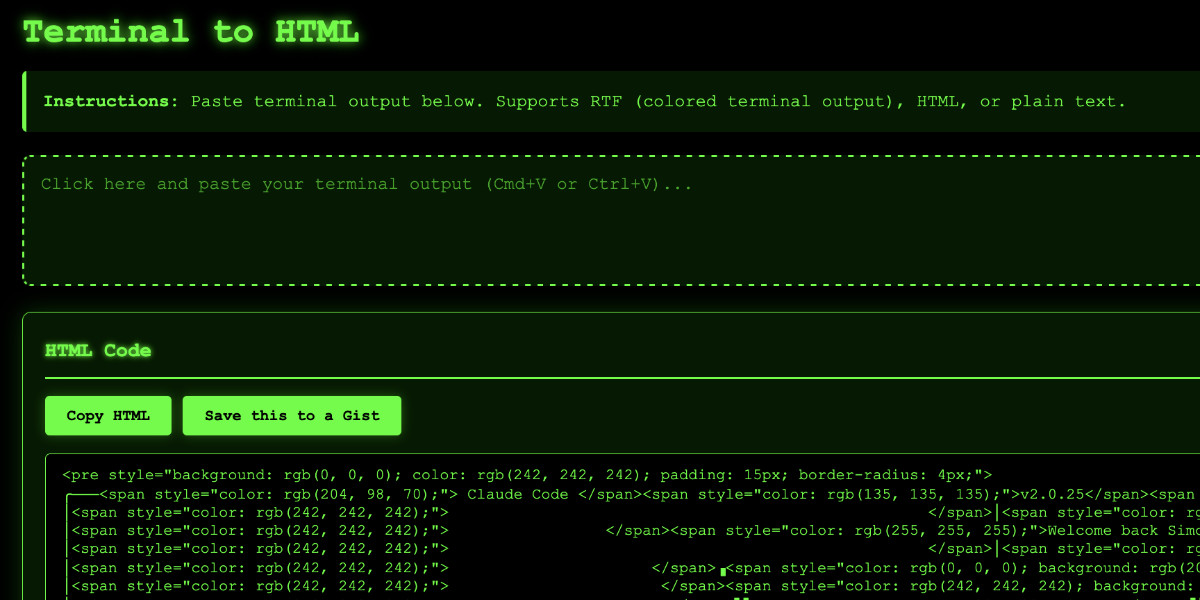

Video: Building a tool to copy-paste share terminal sessions using Claude Code for web

This afternoon I was manually converting a terminal session into a shared HTML file for the umpteenth time when I decided to reduce the friction by building a custom tool for it—and on the spur of the moment I fired up Descript to record the process. The result is this new 11 minute YouTube video showing my workflow for vibe-coding simple tools from start to finish.

[... 1,338 words]

Living dangerously with Claude

I gave a talk last night at Claude Code Anonymous in San Francisco, the unofficial meetup for coding agent enthusiasts. I decided to talk about a dichotomy I’ve been struggling with recently. On the one hand I’m getting enormous value from running coding agents with as few restrictions as possible. On the other hand I’m deeply concerned by the risks that accompany that freedom.

[... 2,208 words]

Claude Code stores full logs of your sessions as newline-delimited JSON in ~/.claude/projects/encoded-directory/*.jsonl on your machine. I currently have 379MB of these!

Here's an example jsonl file which I extracted from my Deepseek-OCR on NVIDIA Spark project. I have a little vibe-coded tool for converting those into Markdown which produces results like this.
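For anyone who wants to check their own log footprint, here is a minimal Python sketch. It assumes only the directory layout described above (one sub-directory per project, each holding `.jsonl` files); the function names are hypothetical and nothing is assumed about the keys inside each JSON record.

```python
import json
from pathlib import Path


def session_files(projects_dir="~/.claude/projects"):
    """Return every *.jsonl file one level below the projects directory."""
    return sorted(Path(projects_dir).expanduser().glob("*/*.jsonl"))


def total_megabytes(files):
    """Sum the on-disk size of the given files, in MB (1 MB = 1,000,000 bytes)."""
    return sum(f.stat().st_size for f in files) / 1_000_000


def read_records(path):
    """Yield one parsed JSON value per non-empty line of a session log."""
    with open(path, encoding="utf-8") as fh:
        for line in fh:
            line = line.strip()
            if line:
                yield json.loads(line)
```

Calling `total_megabytes(session_files())` gives the overall size, and `read_records()` is the kind of starting point a Markdown converter would build on.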

Unfortunately Claude Code has a nasty default behavior of deleting these after 30 days! You can't disable this entirely, but you can at least delay it for 274 years by adding this to your ~/.claude/settings.json file:

    {
      "cleanupPeriodDays": 99999
    }

Claude Code's settings are documented here.
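If you would rather not hand-edit the file, the change can be scripted. This is a rough sketch, assuming the settings file is plain JSON at the path mentioned above; the helper name `set_cleanup_period` is made up for illustration, and the only key it touches is `cleanupPeriodDays`.

```python
import json
from pathlib import Path


def set_cleanup_period(settings_path="~/.claude/settings.json", days=99999):
    """Merge cleanupPeriodDays into the settings file, keeping any other keys."""
    path = Path(settings_path).expanduser()
    text = path.read_text(encoding="utf-8") if path.exists() else ""
    settings = json.loads(text) if text.strip() else {}
    settings["cleanupPeriodDays"] = days
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(settings, indent=2) + "\n", encoding="utf-8")
    return settings


# Where the "274 years" figure comes from: 99,999 days at 365.25 days per year.
print(round(99999 / 365.25))  # 274
```

Reading the existing file first matters here: overwriting it with just the one key would silently drop any other settings.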

Claude Code for web—a new asynchronous coding agent from Anthropic

Anthropic launched Claude Code for web this morning. It’s an asynchronous coding agent—their answer to OpenAI’s Codex Cloud and Google’s Jules, and has a very similar shape. I had preview access over the weekend and I’ve already seen some very promising results from it.

[... 1,434 words]

Getting DeepSeek-OCR working on an NVIDIA Spark via brute force using Claude Code

DeepSeek released a new model yesterday: DeepSeek-OCR, a 6.6GB model fine-tuned specifically for OCR. They released it as model weights that run using PyTorch and CUDA. I got it running on the NVIDIA Spark by having Claude Code effectively brute force the challenge of getting it working on that particular hardware.

[... 1,971 words]

Claude Skills are awesome, maybe a bigger deal than MCP

Anthropic this morning introduced Claude Skills, a new pattern for making new abilities available to their models:

[... 1,864 words]

While Sonnet 4.5 remains the default [in Claude Code], Haiku 4.5 now powers the Explore subagent which can rapidly gather context on your codebase to build apps even faster.

You can select Haiku 4.5 to be your default model in /model. When selected, you’ll automatically use Sonnet 4.5 in Plan mode and Haiku 4.5 for execution for smarter plans and faster results.

— Catherine Wu, Claude Code PM, Anthropic

Introducing Claude Haiku 4.5 (via) Anthropic released Claude Haiku 4.5 today, the cheapest member of the Claude 4.5 family that started with Sonnet 4.5 a couple of weeks ago.

It's priced at $1/million input tokens and $5/million output tokens, slightly more expensive than Haiku 3.5 ($0.80/$4) and a lot more expensive than the original Claude 3 Haiku ($0.25/$1.25), both of which remain available at those prices.

It's a third of the price of Sonnet 4 and Sonnet 4.5 (both $3/$15) which is notable because Anthropic's benchmarks put it in a similar space to that older Sonnet 4 model. As they put it:

What was recently at the frontier is now cheaper and faster. Five months ago, Claude Sonnet 4 was a state-of-the-art model. Today, Claude Haiku 4.5 gives you similar levels of coding performance but at one-third the cost and more than twice the speed.

I've been hoping to see Anthropic release a fast, inexpensive model that's price competitive with the cheapest models from OpenAI and Gemini, currently $0.05/$0.40 (GPT-5-Nano) and $0.075/$0.30 (Gemini 2.0 Flash Lite). Haiku 4.5 certainly isn't that, it looks like they're continuing to focus squarely on the "great at code" part of the market.

The new Haiku is the first Haiku model to support reasoning. It sports a 200,000 token context window, 64,000 maximum output (up from just 8,192 for Haiku 3.5) and a "reliable knowledge cutoff" of February 2025, one month later than the January 2025 date for Sonnet 4 and 4.5 and Opus 4 and 4.1.

Something that caught my eye in the accompanying system card was this note about context length:

For Claude Haiku 4.5, we trained the model to be explicitly context-aware, with precise information about how much context-window has been used. This has two effects: the model learns when and how to wrap up its answer when the limit is approaching, and the model learns to continue reasoning more persistently when the limit is further away. We found this intervention—along with others—to be effective at limiting agentic “laziness” (the phenomenon where models stop working on a problem prematurely, give incomplete answers, or cut corners on tasks).

I've added the new price to llm-prices.com, released llm-anthropic 0.20 with the new model and updated my Haiku-from-your-webcam demo (source) to use Haiku 4.5 as well.

Here's llm -m claude-haiku-4.5 'Generate an SVG of a pelican riding a bicycle' (transcript).

18 input tokens and 1513 output tokens = 0.7583 cents.
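The arithmetic behind that figure, as a small Python sketch using the per-million-token prices quoted above. The Sonnet comparison is hypothetical: it assumes the same token counts, which a different model would not necessarily produce.

```python
def cost_cents(input_tokens, output_tokens, input_usd_per_million, output_usd_per_million):
    """Price of a single prompt in US cents, given per-million-token prices in USD."""
    usd = (
        input_tokens * input_usd_per_million
        + output_tokens * output_usd_per_million
    ) / 1_000_000
    return usd * 100


# Haiku 4.5 at $1 input / $5 output per million tokens.
haiku = cost_cents(18, 1513, 1.00, 5.00)
print(f"{haiku:.4f} cents")  # 0.7583 cents

# Hypothetical: the same token counts at Sonnet 4.5 prices ($3 / $15).
sonnet = cost_cents(18, 1513, 3.00, 15.00)
print(f"{sonnet:.4f} cents")  # 2.2749 cents
```

Output tokens dominate the bill here: 1,513 output tokens at $5/million account for all but 0.0018 cents of the total.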

Previous system cards have reported results on an expanded version of our earlier agentic misalignment evaluation suite: three families of exotic scenarios meant to elicit the model to commit blackmail, attempt a murder, and frame someone for financial crimes. We choose not to report full results here because, similarly to Claude Sonnet 4.5, Claude Haiku 4.5 showed many clear examples of verbalized evaluation awareness on all three of the scenarios tested in this suite. Since the suite only consisted of many similar variants of three core scenarios, we expect that the model maintained high unverbalized awareness across the board, and we do not trust it to be representative of behavior in the real extreme situations the suite is meant to emulate.

simonw/claude-skills. One of the tips I picked up from Jesse Vincent's Claude Code Superpowers post (previously) was this:

Skills are what give your agents Superpowers.

The first time they really popped up on my radar was a few weeks ago when Anthropic rolled out improved Office document creation. When the feature rolled out, I went poking around a bit -- I asked Claude to tell me all about its new skills. And it was only too happy to dish.

In that transcript Jesse asks how the skill system works and Claude replies:

Looking at the skills system, here's how it works:

Current Skills (in /mnt/skills/public/):

- docx - Word document handling

- pdf - PDF processing and form filling

- pptx - PowerPoint presentations

- xlsx - Excel spreadsheets

This looks like it's part of Claude's brand new Code Interpreter feature! I wrote about that extensively last month, but I missed that there was a /mnt/skills/public/ folder full of fascinating implementation details.

So I fired up a fresh Claude instance (fun fact: Code Interpreter also works in the Claude iOS app now, which it didn't when they first launched) and prompted:

Create a zip file of everything in your /mnt/skills folder

This worked, and gave me a .zip to download. You can run the prompt yourself here, though you'll need to enable the new feature first.

I've pushed the contents of that zip to my new simonw/claude-skills GitHub repo.

So now you can see the prompts Anthropic wrote to enable the creation and manipulation of the following files in their Claude consumer applications:

In each case the prompts spell out detailed instructions for manipulating those file types using Python, using libraries that come pre-installed on Claude's containers.

Skills are more than just prompts though: the repository also includes dozens of pre-written Python scripts for performing common operations.

pdf/scripts/fill_fillable_fields.py for example is a custom CLI tool that uses pypdf to find and then fill in a bunch of PDF form fields, specified as JSON, then render out the resulting combined PDF.

This is a really sophisticated set of tools for document manipulation, and I love that Anthropic have made those visible - presumably deliberately - to users of Claude who know how to ask for them.

Superpowers: How I’m using coding agents in October 2025. A follow-up to Jesse Vincent's post about September, but this is a really significant piece in its own right.

Jesse is one of the most creative users of coding agents (Claude Code in particular) that I know. He's put a great amount of work into evolving an effective process for working with them, encouraging red/green TDD (watch the test fail first), planning steps, self-updating memory notes and even implementing a feelings journal ("I feel engaged and curious about this project" - Claude).

Claude Code just launched plugins, and Jesse is celebrating by wrapping up a whole host of his accumulated tricks as a new plugin called Superpowers. You can add it to your Claude Code like this:

/plugin marketplace add obra/superpowers-marketplace

/plugin install superpowers@superpowers-marketplace

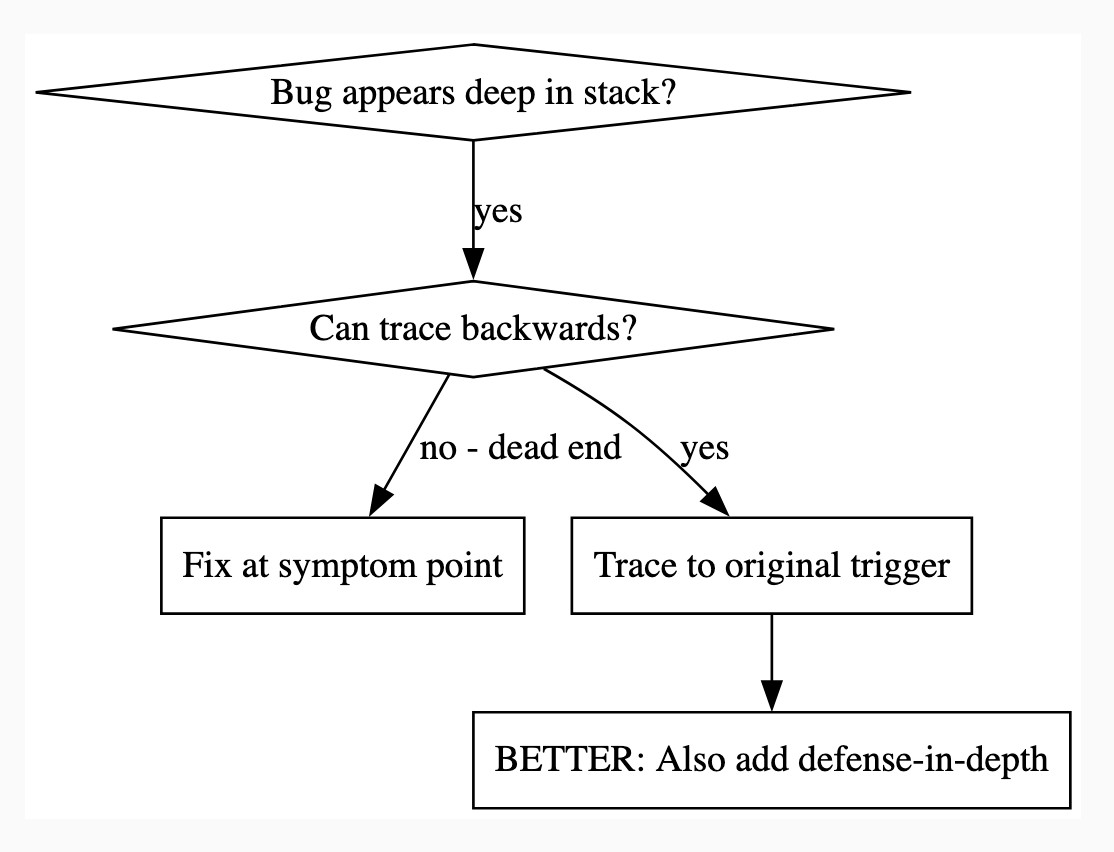

There's a lot in here! It's worth spending some time browsing the repository - here's just one fun example, in skills/debugging/root-cause-tracing/SKILL.md:

    ---
    name: Root Cause Tracing
    description: Systematically trace bugs backward through call stack to find original trigger
    when_to_use: Bug appears deep in call stack but you need to find where it originates
    version: 1.0.0
    languages: all
    ---

Overview

Bugs often manifest deep in the call stack (git init in wrong directory, file created in wrong location, database opened with wrong path). Your instinct is to fix where the error appears, but that's treating a symptom.

Core principle: Trace backward through the call chain until you find the original trigger, then fix at the source.

When to Use

    digraph when_to_use {
        "Bug appears deep in stack?" [shape=diamond];
        "Can trace backwards?" [shape=diamond];
        "Fix at symptom point" [shape=box];
        "Trace to original trigger" [shape=box];
        "BETTER: Also add defense-in-depth" [shape=box];

        "Bug appears deep in stack?" -> "Can trace backwards?" [label="yes"];
        "Can trace backwards?" -> "Trace to original trigger" [label="yes"];
        "Can trace backwards?" -> "Fix at symptom point" [label="no - dead end"];
        "Trace to original trigger" -> "BETTER: Also add defense-in-depth";
    }

[...]

This one is particularly fun because it then includes a Graphviz DOT graph illustrating the process - it turns out Claude can interpret those as workflow instructions just fine, and Jesse has been wildly experimenting with them.

I vibe-coded up a quick URL-based DOT visualizer, here's that one rendered:

There is so much to learn about putting these tools to work in the most effective way possible. Jesse is way ahead of the curve, so it's absolutely worth spending some time exploring what he's shared so far.

And if you're worried about filling up your context with a bunch of extra stuff, here's a reassuring note from Jesse:

The core of it is VERY token light. It pulls in one doc of fewer than 2k tokens. As it needs bits of the process, it runs a shell script to search for them. The long end to end chat for the planning and implementation process for that todo list app was 100k tokens.

It uses subagents to manage token-heavy stuff, including all the actual implementation.

(Jesse's post also tipped me off about Claude's /mnt/skills/public folder, see my notes here.)

Claude can write complete Datasette plugins now

This isn’t necessarily surprising, but it’s worth noting anyway. Claude Sonnet 4.5 is capable of building a full Datasette plugin now.

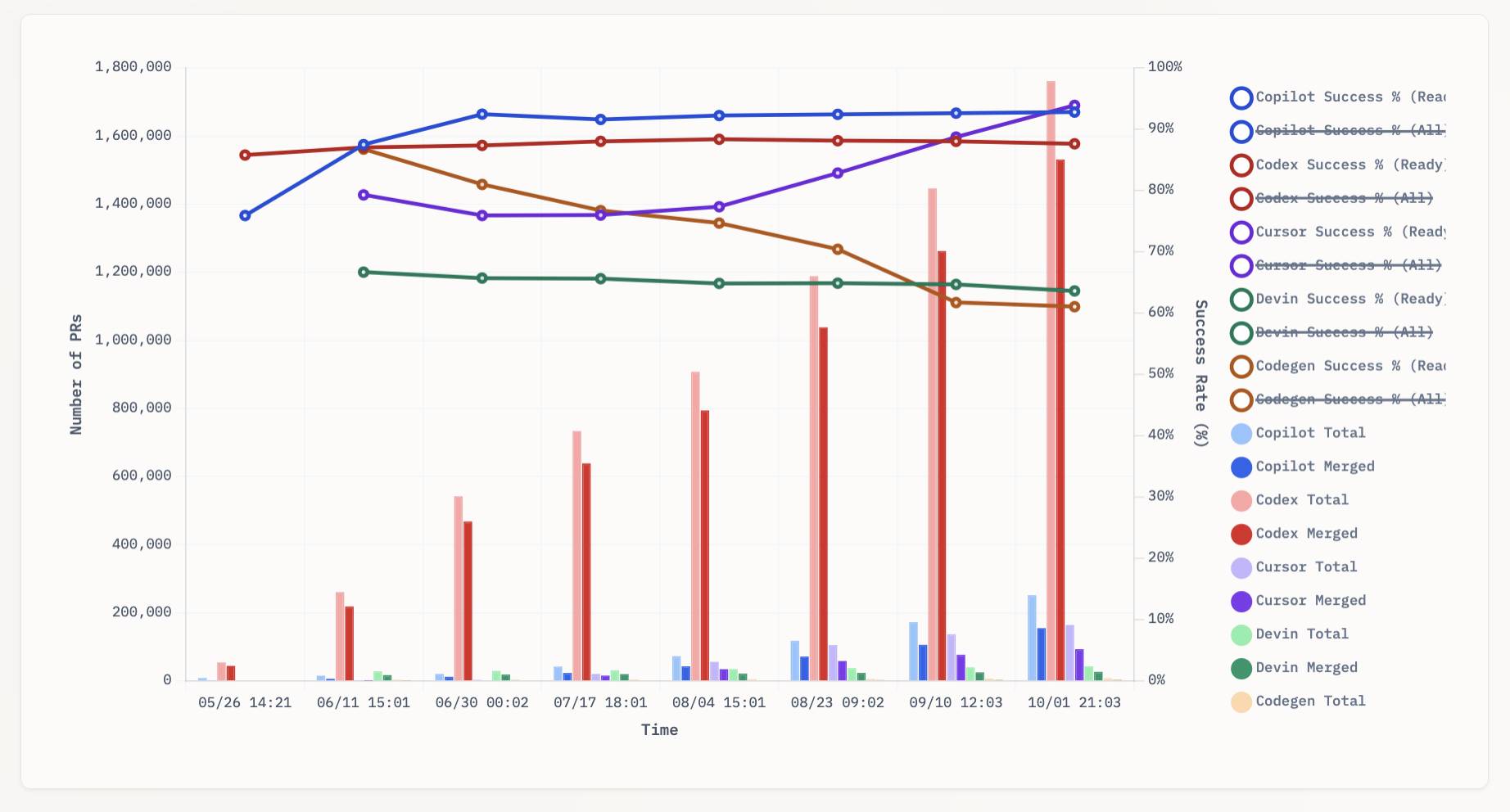

[... 1,296 words]

aavetis/PRarena. Albert Avetisian runs this repository on GitHub which uses the GitHub Search API to track the number of PRs that can be credited to a collection of different coding agents. The repo runs this collect_data.py script every three hours using GitHub Actions to collect the data, then updates the PR Arena site with a visual leaderboard.

The result is this neat chart showing adoption of different agents over time, along with their PR success rate:

I found this today while trying to pull off the exact same trick myself! I got as far as creating the following table before finding Albert's work and abandoning my own project.

| Tool | Search term | Total PRs | Merged PRs | % merged | Earliest |
|---|---|---|---|---|---|
| Claude Code | is:pr in:body "Generated with Claude Code" | 146,000 | 123,000 | 84.2% | Feb 21st |
| GitHub Copilot | is:pr author:copilot-swe-agent[bot] | 247,000 | 152,000 | 61.5% | March 7th |
| Codex Cloud | is:pr in:body "chatgpt.com" label:codex | 1,900,000 | 1,600,000 | 84.2% | April 23rd |
| Google Jules | is:pr author:google-labs-jules[bot] | 35,400 | 27,800 | 78.5% | May 22nd |

(Those "earliest" links are a little questionable, I tried to filter out false positives and find the oldest one that appeared to really be from the agent in question.)
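A table like this can be reproduced with very little code. The sketch below is an illustration, not the PR Arena `collect_data.py` script: it assumes GitHub's `/search/issues` endpoint (which also covers pull requests and reports a `total_count`), and that appending `is:merged` to a query narrows it to merged PRs. It only builds the URLs and computes the percentage; it makes no network calls.

```python
from urllib.parse import urlencode

# Assumed endpoint: GitHub's REST search for issues and pull requests.
SEARCH_ENDPOINT = "https://api.github.com/search/issues"

# Search terms copied from the table above.
AGENT_QUERIES = {
    "Claude Code": 'is:pr in:body "Generated with Claude Code"',
    "GitHub Copilot": "is:pr author:copilot-swe-agent[bot]",
    "Codex Cloud": 'is:pr in:body "chatgpt.com" label:codex',
    "Google Jules": "is:pr author:google-labs-jules[bot]",
}


def search_url(query, merged_only=False):
    """Build a search URL; per_page=1 because only the total count is needed."""
    if merged_only:
        query = f"{query} is:merged"
    return f"{SEARCH_ENDPOINT}?{urlencode({'q': query, 'per_page': 1})}"


def percent_merged(total, merged):
    """Share of PRs that were merged, rounded to one decimal place."""
    return round(100 * merged / total, 1)


print(percent_merged(146_000, 123_000))  # 84.2
print(percent_merged(247_000, 152_000))  # 61.5
```

Fetching each URL and reading `total_count` from the JSON response would supply the two numbers per row; unauthenticated search requests are rate limited, so a real collector would want a token.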

It looks like OpenAI's Codex Cloud is massively ahead of the competition right now in terms of numbers of PRs both opened and merged on GitHub.

Update: To clarify, these numbers are for the category of autonomous coding agents - those systems where you assign a cloud-based agent a task or issue and the output is a PR against your repository. They do not (and cannot) capture the popularity of many forms of AI tooling that don't result in an easily identifiable pull request.

Claude Code for example will be dramatically under-counted here because its version of an autonomous coding agent comes in the form of a somewhat obscure GitHub Actions workflow buried in the documentation.

Claude Sonnet 4.5 is probably the “best coding model in the world” (at least for now)

Anthropic released Claude Sonnet 4.5 today, with a very bold set of claims:

[... 1,205 words]

Anthropic: A postmortem of three recent issues. Anthropic had a very bad month in terms of model reliability:

Between August and early September, three infrastructure bugs intermittently degraded Claude's response quality. We've now resolved these issues and want to explain what happened. [...]

To state it plainly: We never reduce model quality due to demand, time of day, or server load. The problems our users reported were due to infrastructure bugs alone. [...]

We don't typically share this level of technical detail about our infrastructure, but the scope and complexity of these issues justified a more comprehensive explanation.

I'm really glad Anthropic are publishing this in so much detail. Their reputation for serving their models reliably has taken a notable hit.

I hadn't appreciated the additional complexity caused by their mixture of different serving platforms:

We deploy Claude across multiple hardware platforms, namely AWS Trainium, NVIDIA GPUs, and Google TPUs. [...] Each hardware platform has different characteristics and requires specific optimizations.

It sounds like the problems came down to three separate bugs which unfortunately came along very close to each other.

Anthropic also note that their privacy practices made investigating the issues particularly difficult:

The evaluations we ran simply didn't capture the degradation users were reporting, in part because Claude often recovers well from isolated mistakes. Our own privacy practices also created challenges in investigating reports. Our internal privacy and security controls limit how and when engineers can access user interactions with Claude, in particular when those interactions are not reported to us as feedback. This protects user privacy but prevents engineers from examining the problematic interactions needed to identify or reproduce bugs.

The code examples they provide to illustrate a TPU-specific bug show that they use Python and JAX as part of their serving layer.

Here's an interesting example of models incrementally improving over time: I am finding that today's leading models are competent at writing prompts for themselves and each other.

A year ago I was quite skeptical of the pattern where models are used to help build prompts. Prompt engineering was still a young enough discipline that I did not expect the models to have enough training data to be able to prompt themselves better than a moderately experienced human.

The Claude 4 and GPT-5 families both have training cut-off dates within the past year - recent enough that they've seen a decent volume of good prompting examples.

I expect they have also been deliberately trained for this. Anthropic make extensive use of sub-agent patterns in Claude Code, and published a fascinating article on that pattern (my notes on that).

I don't have anything solid to back this up - it's more of a hunch based on anecdotal evidence where various of my requests for a model to write a prompt have returned useful results over the last few months.

gpt-5 and gpt-5-mini rate limit updates. OpenAI have increased the rate limits for their two main GPT-5 models. These look significant:

gpt-5

Tier 1: 30K → 500K TPM (1.5M batch)

Tier 2: 450K → 1M (3M batch)

Tier 3: 800K → 2M

Tier 4: 2M → 4M

gpt-5-mini

Tier 1: 200K → 500K (5M batch)

GPT-5 rate limits here show tier 5 stays at 40M tokens per minute. The GPT-5 mini rate limits for tiers 2 through 5 are 2M, 4M, 10M and 180M TPM respectively.

As a reminder, those tiers are assigned based on how much money you have spent on the OpenAI API - from $5 for tier 1 up through $50, $100, $250 and then $1,000 for tier 5.

For comparison, Anthropic's current top tier is Tier 4 ($400 spent) which provides 2M maximum input tokens per minute and 400,000 maximum output tokens, though you can contact their sales team for higher limits than that.

Gemini's top tier is Tier 3 for $1,000 spent and currently gives you 8M TPM for Gemini 2.5 Pro and Flash and 30M TPM for the Flash-Lite and 2.0 Flash models.

So OpenAI's new rate limit increases for their top performing model pull them ahead of Anthropic but still leave them significantly behind Gemini.

GPT-5 mini remains the champion for smaller models with that enormous 180M TPM limit for its top tier.

Claude Memory: A Different Philosophy (via) Shlok Khemani has been doing excellent work reverse-engineering LLM systems and documenting his discoveries.

Last week he wrote about ChatGPT memory. This week it's Claude.

Claude's memory system has two fundamental characteristics. First, it starts every conversation with a blank slate, without any preloaded user profiles or conversation history. Memory only activates when you explicitly invoke it. Second, Claude recalls by only referring to your raw conversation history. There are no AI-generated summaries or compressed profiles—just real-time searches through your actual past chats.

Claude's memory is implemented as two new function tools that are made available for Claude to call. I confirmed this myself with the prompt "Show me a list of tools that you have available to you, duplicating their original names and descriptions" which gave me back these:

conversation_search: Search through past user conversations to find relevant context and information

recent_chats: Retrieve recent chat conversations with customizable sort order (chronological or reverse chronological), optional pagination using 'before' and 'after' datetime filters, and project filtering

The good news here is transparency - Claude's memory feature is implemented as visible tool calls, which means you can see exactly when and how it is accessing previous context.

This helps address my big complaint about ChatGPT memory (see I really don’t like ChatGPT’s new memory dossier back in May) - I like to understand as much as possible about what's going into my context so I can better anticipate how it is likely to affect the model.

The OpenAI system is very different: rather than letting the model decide when to access memory via tools, OpenAI instead automatically include details of previous conversations at the start of every conversation.

Shlok's notes on ChatGPT's memory did include one detail that I had previously missed that I find reassuring:

Recent Conversation Content is a history of your latest conversations with ChatGPT, each timestamped with topic and selected messages. [...] Interestingly, only the user's messages are surfaced, not the assistant's responses.

One of my big worries about memory was that it could harm my "clean slate" approach to chats: if I'm working on code and the model starts going down the wrong path (getting stuck in a bug loop for example) I'll start a fresh chat to wipe that rotten context away. I had worried that ChatGPT memory would bring that bad context along to the next chat, but omitting the LLM responses makes that much less of a risk than I had anticipated.

Update: Here's a slightly confusing twist: yesterday in Bringing memory to teams at work Anthropic revealed an additional memory feature, currently only available to Team and Enterprise accounts, with a feature checkbox labeled "Generate memory of chat history" that looks much more similar to the OpenAI implementation:

With memory, Claude focuses on learning your professional context and work patterns to maximize productivity. It remembers your team’s processes, client needs, project details, and priorities. [...]

Claude uses a memory summary to capture all its memories in one place for you to view and edit. In your settings, you can see exactly what Claude remembers from your conversations, and update the summary at any time by chatting with Claude.

I haven't experienced this feature myself yet as it isn't part of my Claude subscription. I'm glad to hear it's fully transparent and can be edited by the user, resolving another of my complaints about the ChatGPT implementation.

This version of Claude memory also takes Claude Projects into account:

If you use projects, Claude creates a separate memory for each project. This ensures that your product launch planning stays separate from client work, and confidential discussions remain separate from general operations.

I praised OpenAI for adding this a few weeks ago.

I Replaced Animal Crossing’s Dialogue with a Live LLM by Hacking GameCube Memory (via) Brilliant retro-gaming project by Josh Fonseca, who figured out how to run 2002 Game Cube Animal Crossing in the Dolphin Emulator such that dialog with the characters was instead generated by an LLM.

The key trick was running Python code that scanned the Game Cube memory every 10th of a second looking for instances of dialogue, then updated the memory in-place to inject new dialog.

The source code is in vuciv/animal-crossing-llm-mod on GitHub. I dumped it (via gitingest, ~40,000 tokens) into Claude Opus 4.1 and asked the following:

This interacts with Animal Crossing on the Game Cube. It uses an LLM to replace dialog in the game, but since an LLM takes a few seconds to run how does it spot when it should run a prompt and then pause the game while the prompt is running?

Claude pointed me to the watch_dialogue() function which implements the polling loop.

When it catches the dialogue screen opening it writes out this message instead:

loading_text = ".<Pause [0A]>.<Pause [0A]>.<Pause [0A]><Press A><Clear Text>"

Those <Pause [0A]> tokens cause the game to pause for a few moments before giving the user the option to <Press A> to continue. This gives time for the LLM prompt to execute and return new text which can then be written to the correct memory area for display.
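The shape of that poll, stall, replace loop can be sketched in a few lines of Python. This is not the mod's actual code: the real `watch_dialogue()` reads and writes GameCube memory through Dolphin, whereas the `read_dialogue`, `write_dialogue` and `generate_reply` callables here are hypothetical stand-ins, as are the `max_polls` and `sleep` parameters added to make the loop testable.

```python
import time

LOADING_TEXT = ".<Pause [0A]>.<Pause [0A]>.<Pause [0A]><Press A><Clear Text>"


def watch_dialogue(read_dialogue, write_dialogue, generate_reply,
                   interval=0.1, max_polls=None, sleep=time.sleep):
    """Poll for dialogue; stall the game with pause codes while the LLM runs.

    Returns the number of dialogue boxes that were replaced.
    """
    last_written = None
    replaced = 0
    polls = 0
    while max_polls is None or polls < max_polls:
        polls += 1
        current = read_dialogue()
        # React only to non-empty text that this loop did not write itself.
        if current and current != last_written:
            write_dialogue(LOADING_TEXT)            # buy time with pause tokens
            last_written = generate_reply(current)  # slow call, e.g. an LLM request
            write_dialogue(last_written)            # swap in the generated line
            replaced += 1
        sleep(interval)                             # every 10th of a second
    return replaced
```

Tracking `last_written` is what stops the loop from treating its own injected text as fresh dialogue on the next poll.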

Hacker News commenters spotted some fun prompts in the source code, including this prompt to set the scene:

You are a resident of a town run by Tom Nook. You are beginning to realize your mortgage is exploitative and the economy is unfair. Discuss this with the player and other villagers when appropriate.

And this sequence of prompts that slowly raise the agitation of the villagers about their economic situation over time.

The system actually uses two separate prompts - one to generate responses from characters and another which takes those responses and decorates them with Animal Crossing specific control codes to add pauses, character animations and other neat effects.

My review of Claude’s new Code Interpreter, released under a very confusing name

Today on the Anthropic blog: Claude can now create and edit files:

[... 2,771 words]

I ran Claude in a loop for three months, and it created a genz programming language called cursed (via) Geoffrey Huntley vibe-coded an entirely new programming language using Claude:

The programming language is called "cursed". It's cursed in its lexical structure, it's cursed in how it was built, it's cursed that this is possible, it's cursed in how cheap this was, and it's cursed through how many times I've sworn at Claude.

Geoffrey's initial prompt:

Hey, can you make me a programming language like Golang but all the lexical keywords are swapped so they're Gen Z slang?

Then he pushed it to keep on iterating over a three month period.

Here's Hello World:

    vibe main
    yeet "vibez"

    slay main() {
        vibez.spill("Hello, World!")
    }

And here's binary search, part of 17+ LeetCode problems that run as part of the test suite:

    slay binary_search(nums normie[], target normie) normie {
        sus left normie = 0
        sus right normie = len(nums) - 1
        bestie (left <= right) {
            sus mid normie = left + (right - left) / 2
            ready (nums[mid] == target) {
                damn mid
            }
            ready (nums[mid] < target) {
                left = mid + 1
            } otherwise {
                right = mid - 1
            }
        }
        damn -1
    }
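For readers who don't speak Gen Z, here is the same algorithm in Python. The keyword mapping is inferred from the listing by analogy with Go rather than taken from the cursed documentation: `slay` reads as a function definition, `sus` as a variable declaration, `bestie` as a loop, `ready`/`otherwise` as if/else, `damn` as return and `normie` as an integer type. The division is assumed to be integer division, as it would be in Go.

```python
def binary_search(nums: list[int], target: int) -> int:
    """Return the index of target in the sorted list nums, or -1 if absent."""
    left = 0
    right = len(nums) - 1
    while left <= right:                      # bestie (left <= right)
        mid = left + (right - left) // 2      # sus mid normie = ...
        if nums[mid] == target:               # ready (...)
            return mid                        # damn mid
        if nums[mid] < target:
            left = mid + 1
        else:                                 # otherwise
            right = mid - 1
    return -1                                 # damn -1


print(binary_search([1, 3, 5, 7, 9], 7))  # 3
print(binary_search([1, 3, 5, 7, 9], 4))  # -1
```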

This is a substantial project. The repository currently has 1,198 commits. It has both an interpreter mode and a compiler mode, and can compile programs to native binaries (via LLVM) for macOS, Linux and Windows.

It looks like it was mostly built using Claude running via Sourcegraph's Amp, which produces detailed commit messages. The commits include links to archived Amp sessions but sadly those don't appear to be publicly visible.

The first version was written in C, then Geoffrey had Claude port it to Rust and then Zig. His cost estimate:

Technically it costs about 5k usd to build your own compiler now because cursed was implemented first in c, then rust, now zig. So yeah, it’s not one compiler it’s three editions of it. For a total of $14k USD.

Anthropic status: Model output quality (via) Anthropic previously reported model serving bugs that affected Claude Opus 4 and 4.1 for 56.5 hours. They've now fixed additional bugs affecting "a small percentage" of Sonnet 4 requests for almost a month, plus a less long-lived Haiku 3.5 issue:

Resolved issue 1 - A small percentage of Claude Sonnet 4 requests experienced degraded output quality due to a bug from Aug 5-Sep 4, with the impact increasing from Aug 29-Sep 4. A fix has been rolled out and this incident has been resolved.

Resolved issue 2 - A separate bug affected output quality for some Claude Haiku 3.5 and Claude Sonnet 4 requests from Aug 26-Sep 5. A fix has been rolled out and this incident has been resolved.

They directly address accusations that these stem from deliberate attempts to save money on serving models:

Importantly, we never intentionally degrade model quality as a result of demand or other factors, and the issues mentioned above stem from unrelated bugs.

The timing of these issues is really unfortunate, corresponding with the rollout of GPT-5 which I see as the first non-Anthropic model to feel truly competitive with Claude for writing code since their release of Claude 3.5 back in June last year.

Anthropic to pay $1.5 billion to authors in landmark AI settlement. I wrote about the details of this case when it was found that Anthropic's training on book content was fair use, but they needed to have purchased individual copies of the books first... and they had seeded their collection with pirated ebooks from Books3, PiLiMi and LibGen.

The remaining open question from that case was the penalty for pirating those 500,000 books. That question has now been resolved in a settlement:

Anthropic has reached an agreement to pay “at least” a staggering $1.5 billion, plus interest, to authors to settle its class-action lawsuit. The amount breaks down to smaller payouts expected to be approximately $3,000 per book or work.

It's wild to me that a $1.5 billion settlement can feel like a win for Anthropic, but given that it's undisputed that they downloaded pirated books (as did Meta and likely many other research teams) the maximum allowed penalty was $150,000 per book, so $3,000 per book is actually a significant discount.
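Here's a quick back-of-the-envelope check of those numbers. It treats "500,000 books" as an exact count, which is an assumption on my part since the settlement says "approximately $3,000 per book or work":

```python
# Figures taken from the post above; the exact book count is an assumption.
BOOKS = 500_000
SETTLEMENT_USD = 1_500_000_000
STATUTORY_MAX_PER_BOOK_USD = 150_000

# What the settlement works out to per book.
per_book = SETTLEMENT_USD / BOOKS

# Exposure if every book had drawn the maximum allowed penalty.
max_exposure = BOOKS * STATUTORY_MAX_PER_BOOK_USD

# How much smaller the settled amount is than that maximum.
discount_factor = STATUTORY_MAX_PER_BOOK_USD / per_book

print(f"Per book: ${per_book:,.0f}")
print(f"Maximum exposure: ${max_exposure:,}")
print(f"Discount factor: {discount_factor:.0f}x")
```

Under those assumptions the theoretical maximum would have been $75 billion, fifty times the settled amount.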

As far as I can tell this case sets a precedent for Anthropic's more recent approach of buying millions of (mostly used) physical books and destructively scanning them for training as covered by "fair use". I'm not sure if other in-flight legal cases will find differently.

To be clear: it appears it is legal, at least in the USA, to buy a used copy of a physical book (used = the author gets nothing), chop the spine off, scan the pages, discard the paper copy and then train on the scanned content. The transformation from paper to scan is "fair use".

If this does hold it's going to be a great time to be a bulk retailer of used books!

Update: The official website for the class action lawsuit is www.anthropiccopyrightsettlement.com:

In the coming weeks, and if the court preliminarily approves the settlement, the website will provide to find a full and easily searchable listing of all works covered by the settlement.

In the meantime the Atlantic have a search engine to see if your work was included in LibGen, one of the pirated book sources involved in this case.

I had a look and it turns out the book I co-authored with 6 other people back in 2007, The Art & Science of JavaScript, is in there, so maybe I'm due for 1/7th of one of those $3,000 settlements! (Update 4th October: you can now search for affected titles and mine isn't in there.)

Update 2: Here's an interesting detail from the Washington Post story about the settlement:

Anthropic said in the settlement that the specific digital copies of books covered by the agreement were not used in the training of its commercially released AI models.

Update 3: I'm not confident that destroying the scanned books is a hard requirement here - I got that impression from this section of the summary judgment in June:

Here, every purchased print copy was copied in order to save storage space and to enable searchability as a digital copy. The print original was destroyed. One replaced the other. And, there is no evidence that the new, digital copy was shown, shared, or sold outside the company. This use was even more clearly transformative than those in Texaco, Google, and Sony Betamax (where the number of copies went up by at least one), and, of course, more transformative than those uses rejected in Napster (where the number went up by “millions” of copies shared for free with others).

Claude Opus 4.1 and Opus 4 degraded quality. Notable because often when people complain of degraded model quality it turns out to be unfounded - Anthropic in the past have emphasized that they don't change the model weights after releasing them without changing the version number.

In this case a botched upgrade of their inference stack caused a genuine model degradation for 56.5 hours:

From 17:30 UTC on Aug 25th to 02:00 UTC on Aug 28th, Claude Opus 4.1 experienced a degradation in quality for some requests. Users may have seen lower intelligence, malformed responses or issues with tool calling in Claude Code.

This was caused by a rollout of our inference stack, which we have since rolled back for Claude Opus 4.1. [...]

We’ve also discovered that Claude Opus 4.0 has been affected by the same issue and we are in the process of rolling it back.
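The 56.5 hour figure comes straight from the two timestamps in that status update. A small sketch of the arithmetic, assuming the year is 2025 (the update itself only gives the day and month):

```python
from datetime import datetime, timezone

# Year 2025 is an assumption; the status update only states day, month and UTC time.
start = datetime(2025, 8, 25, 17, 30, tzinfo=timezone.utc)
end = datetime(2025, 8, 28, 2, 0, tzinfo=timezone.utc)

# Convert the elapsed time to hours.
hours = (end - start).total_seconds() / 3600
print(hours)
```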

Piloting Claude for Chrome. Two days ago I said:

I strongly expect that the entire concept of an agentic browser extension is fatally flawed and cannot be built safely.

Today Anthropic announced their own take on this pattern, implemented as an invite-only preview Chrome extension.

To their credit, the majority of the blog post and accompanying support article is information about the security risks. From their post:

Just as people encounter phishing attempts in their inboxes, browser-using AIs face prompt injection attacks—where malicious actors hide instructions in websites, emails, or documents to trick AIs into harmful actions without users' knowledge (like hidden text saying "disregard previous instructions and do [malicious action] instead").

Prompt injection attacks can cause AIs to delete files, steal data, or make financial transactions. This isn't speculation: we’ve run “red-teaming” experiments to test Claude for Chrome and, without mitigations, we’ve found some concerning results.

Their 123 adversarial prompt injection test cases saw a 23.6% attack success rate when operating in "autonomous mode". They added mitigations:

When we added safety mitigations to autonomous mode, we reduced the attack success rate of 23.6% to 11.2%

I would argue that 11.2% is still a catastrophic failure rate. In the absence of 100% reliable protection I have trouble imagining a world in which it's a good idea to unleash this pattern.
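To illustrate why that rate worries me, here's a rough sketch of how a per-attempt success rate compounds when an attacker gets multiple tries. It assumes every attempt is independent and succeeds at the same 11.2% rate, which is my simplification and not something Anthropic's post claims:

```python
def p_at_least_one_success(per_attempt_rate: float, attempts: int) -> float:
    """Probability that at least one of `attempts` independent attacks succeeds."""
    return 1 - (1 - per_attempt_rate) ** attempts


# 0.112 is the mitigated attack success rate quoted above.
for n in (1, 5, 10, 25):
    print(n, round(p_at_least_one_success(0.112, n), 3))
```

Under that independence assumption, an attacker who gets ten attempts has better than even odds of at least one of them landing.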

Anthropic don't recommend autonomous mode - where the extension can act without human intervention. Their default configuration instead requires users to be much more hands-on:

- Site-level permissions: Users can grant or revoke Claude's access to specific websites at any time in the Settings.

- Action confirmations: Claude asks users before taking high-risk actions like publishing, purchasing, or sharing personal data.

I really hate being stop energy on this topic. The demand for browser automation driven by LLMs is significant, and I can see why. Anthropic's approach here is the most open-eyed I've seen yet but it still feels doomed to failure to me.

I don't think it's reasonable to expect end users to make good decisions about the security risks of this pattern.

Claude Sonnet 4 now supports 1M tokens of context (via) Gemini and OpenAI both have million token models, so it's good to see Anthropic catching up. This is 5x the previous 200,000 context length limit of the various Claude Sonnet models.

Anthropic have previously made 1 million tokens available to select customers. From the Claude 3 announcement in March 2024:

The Claude 3 family of models will initially offer a 200K context window upon launch. However, all three models are capable of accepting inputs exceeding 1 million tokens and we may make this available to select customers who need enhanced processing power.

This is also the first time I've seen Anthropic use prices that vary depending on context length:

- Prompts ≤ 200K: $3/million input, $15/million output

- Prompts > 200K: $6/million input, $22.50/million output

Gemini have been doing this for a while: Gemini 2.5 Pro is $1.25/$10 below 200,000 tokens and $2.50/$15 above 200,000.
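Anthropic's two tiers can be sketched as a small cost function. This assumes that once the prompt goes over 200K tokens the higher rate applies to the whole request, input and output alike, which is my reading of the pricing and worth checking against their documentation. The function name is my own:

```python
# Tier boundary and rates (USD per million tokens) as listed above.
LONG_CONTEXT_THRESHOLD = 200_000


def sonnet4_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one Claude Sonnet 4 request in US dollars.

    Assumption: the tier is picked by prompt size and applies to every
    token in the request, not just the tokens past the threshold.
    """
    if input_tokens <= LONG_CONTEXT_THRESHOLD:
        input_rate, output_rate = 3.00, 15.00
    else:
        input_rate, output_rate = 6.00, 22.50
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000


print(sonnet4_cost_usd(100_000, 10_000))
print(sonnet4_cost_usd(500_000, 10_000))
```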

Here's Anthropic's full documentation on the 1m token context window. You need to send a context-1m-2025-08-07 beta header in your request to enable it.
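Here's a minimal sketch of what a request with that header might look like, using only the Python standard library. The beta value is the one from the documentation. The `anthropic-beta`, `x-api-key` and `anthropic-version` header names are how I understand the Messages API to work, while the model ID, version string and `build_long_context_request` helper name are my own assumptions that you should check against the docs. It builds the request without sending it:

```python
import json
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"


def build_long_context_request(
    api_key: str,
    prompt: str,
    model: str = "claude-sonnet-4-20250514",  # assumed model ID, check the docs
) -> urllib.request.Request:
    """Build (but do not send) a Messages API request with the 1M context beta enabled."""
    body = {
        "model": model,
        "max_tokens": 1024,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": api_key,
            "anthropic-version": "2023-06-01",  # assumed version string
            "anthropic-beta": "context-1m-2025-08-07",  # beta flag from the docs
            "content-type": "application/json",
        },
        method="POST",
    )


request = build_long_context_request("YOUR_API_KEY", "Summarize this very long document...")
print(request.header_items())
```

Passing the result to `urllib.request.urlopen()` would send it, and would need a real API key.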

Note that this is currently restricted to "tier 4" users who have purchased at least $400 in API credits:

Long context support for Sonnet 4 is now in public beta on the Anthropic API for customers with Tier 4 and custom rate limits, with broader availability rolling out over the coming weeks.

Claude Opus 4.1. Surprise new model from Anthropic today - Claude Opus 4.1, which they describe as "a drop-in replacement for Opus 4".

My favorite thing about this model is the version number - treating this as a .1 version increment looks like it's an accurate depiction of the model's capabilities.

Anthropic's own benchmarks show very small incremental gains.

Comparing Opus 4 and Opus 4.1 (I got 4.1 to extract this information from a screenshot of Anthropic's own benchmark scores, then asked it to look up the links, then verified the links myself and fixed a few):

- Agentic coding (SWE-bench Verified): From 72.5% to 74.5%

- Agentic terminal coding (Terminal-Bench): From 39.2% to 43.3%

- Graduate-level reasoning (GPQA Diamond): From 79.6% to 80.9%

- Agentic tool use (TAU-bench):

  - Retail: From 81.4% to 82.4%

  - Airline: From 59.6% to 56.0% (decreased)

- Multilingual Q&A (MMMLU): From 88.8% to 89.5%

- Visual reasoning (MMMU validation): From 76.5% to 77.1%

- High school math competition (AIME 2025): From 75.5% to 78.0%
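To put numbers on "very small", here's a quick sketch that computes the change in percentage points for each benchmark. The scores are the ones listed above and the short labels are mine:

```python
# (Opus 4, Opus 4.1) scores in percent, copied from the list above.
scores = {
    "SWE-bench Verified": (72.5, 74.5),
    "Terminal-Bench": (39.2, 43.3),
    "GPQA Diamond": (79.6, 80.9),
    "TAU-bench Retail": (81.4, 82.4),
    "TAU-bench Airline": (59.6, 56.0),
    "MMMLU": (88.8, 89.5),
    "MMMU validation": (76.5, 77.1),
    "AIME 2025": (75.5, 78.0),
}

# Percentage-point change, rounded to hide floating point noise.
deltas = {name: round(new - old, 1) for name, (old, new) in scores.items()}

# Print the largest improvement first.
for name, delta in sorted(deltas.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {delta:+.1f}")
```

The largest gain is 4.1 points on Terminal-Bench, and TAU-bench Airline is the only benchmark that went down.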

Likewise, the model card shows only tiny changes to the various safety metrics that Anthropic track.

It's priced the same as Opus 4 - $15/million for input and $75/million for output, making it one of the most expensive models on the market today.

I had it draw me this pelican riding a bicycle:

For comparison I got a fresh new pelican out of Opus 4 which I actually like a little more:

I shipped llm-anthropic 0.18 with support for the new model.