10 posts tagged “mathematics”

2025

OpenAI’s gold medal performance on the International Math Olympiad. This feels notable to me. OpenAI research scientist Alexander Wei:

I’m excited to share that our latest @OpenAI experimental reasoning LLM has achieved a longstanding grand challenge in AI: gold medal-level performance on the world’s most prestigious math competition—the International Math Olympiad (IMO).

We evaluated our models on the 2025 IMO problems under the same rules as human contestants: two 4.5 hour exam sessions, no tools or internet, reading the official problem statements, and writing natural language proofs. [...]

Besides the result itself, I am excited about our approach: We reach this capability level not via narrow, task-specific methodology, but by breaking new ground in general-purpose reinforcement learning and test-time compute scaling.

In our evaluation, the model solved 5 of the 6 problems on the 2025 IMO. For each problem, three former IMO medalists independently graded the model’s submitted proof, with scores finalized after unanimous consensus. The model earned 35/42 points in total, enough for gold!

HUGE congratulations to the team—Sheryl Hsu, Noam Brown, and the many giants whose shoulders we stood on—for turning this crazy dream into reality! I am lucky I get to spend late nights and early mornings working alongside the very best.

Btw, we are releasing GPT-5 soon, and we’re excited for you to try it. But just to be clear: the IMO gold LLM is an experimental research model. We don’t plan to release anything with this level of math capability for several months.

(Normally I would just link to the tweet, but in this case Alexander built a thread... and Twitter threads no longer work for linking as they're only visible to users with an active Twitter account.)

Here's Wikipedia on the International Mathematical Olympiad:

It is widely regarded as the most prestigious mathematical competition in the world. The first IMO was held in Romania in 1959. It has since been held annually, except in 1980. More than 100 countries participate. Each country sends a team of up to six students, plus one team leader, one deputy leader, and observers.

This year's event is in Sunshine Coast, Australia. Here's the web page for the event, which includes a button you can click to access a PDF of the six questions - maybe they don't link to that document directly to discourage it from being indexed.

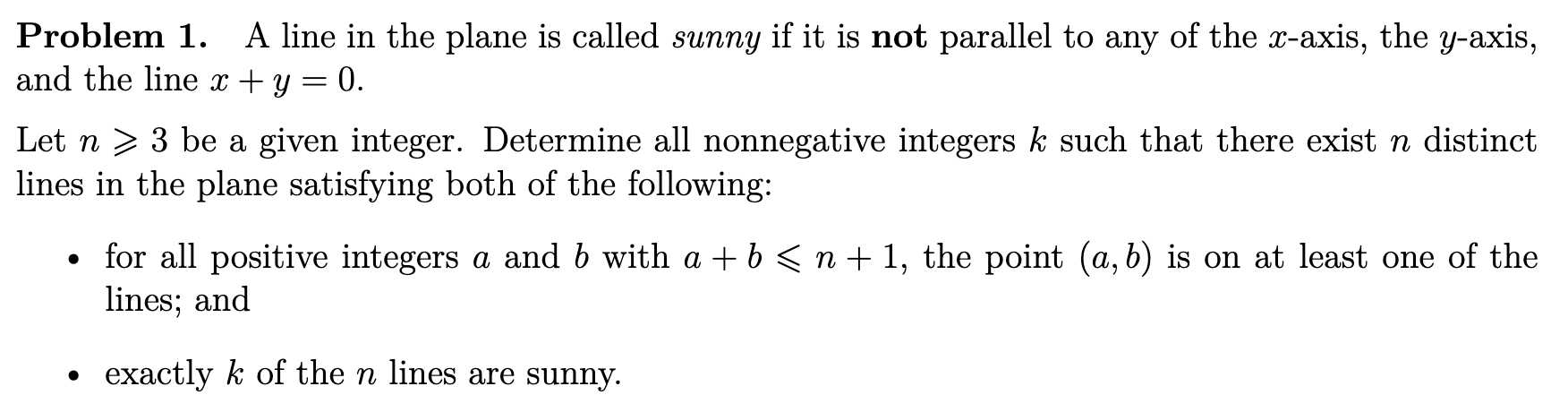

The first of the six questions looks like this:

Alexander shared the proofs produced by the model on GitHub. They're in a slightly strange format - not quite MathML embedded in Markdown - which Alexander excuses since "it is very much an experimental model".

The most notable thing about this is that the unnamed model achieved this score without using any tools. OpenAI's Sebastien Bubeck emphasizes that here:

Just to spell it out as clearly as possible: a next-word prediction machine (because that's really what it is here, no tools no nothing) just produced genuinely creative proofs for hard, novel math problems at a level reached only by an elite handful of pre‑college prodigies.

There's a bunch more useful context in this thread by Noam Brown, including a note that this model wasn't trained specifically for IMO problems:

Typically for these AI results, like in Go/Dota/Poker/Diplomacy, researchers spend years making an AI that masters one narrow domain and does little else. But this isn’t an IMO-specific model. It’s a reasoning LLM that incorporates new experimental general-purpose techniques.

So what’s different? We developed new techniques that make LLMs a lot better at hard-to-verify tasks. IMO problems were the perfect challenge for this: proofs are pages long and take experts hours to grade. Compare that to AIME, where answers are simply an integer from 0 to 999.

Also this model thinks for a long time. o1 thought for seconds. Deep Research for minutes. This one thinks for hours. Importantly, it’s also more efficient with its thinking. And there’s a lot of room to push the test-time compute and efficiency further.

It’s worth reflecting on just how fast AI progress has been, especially in math. In 2024, AI labs were using grade school math (GSM8K) as an eval in their model releases. Since then, we’ve saturated the (high school) MATH benchmark, then AIME, and now are at IMO gold. [...]

When you work at a frontier lab, you usually know where frontier capabilities are months before anyone else. But this result is brand new, using recently developed techniques. It was a surprise even to many researchers at OpenAI. Today, everyone gets to see where the frontier is.

Basically any resource on a difficult subject—a colleague, Google, a published paper—will be wrong or incomplete in various ways. Usefulness isn’t only a matter of correctness.

For example, suppose a colleague has a question she thinks I might know the answer to. Good news: I have some intuition and say something. Then we realize it doesn’t quite make sense, and go back and forth until we converge on something correct.

Such a conversation is full of BS but crucially we can interrogate it and get something useful out of it in the end. Moreover this kind of back and forth allows us to get to the key point in a way that might be difficult when reading a difficult ~50-page paper.

To be clear o3-mini-high is orders of magnitude less useful for this sort of thing than talking to an expert colleague. But still useful along similar dimensions (and with a much broader knowledge base).

Largest known prime number (via) Discovered on 12th October 2024 by the Great Internet Mersenne Prime Search. The new largest prime number is 2^136279841 - 1, which is 41,024,320 digits long.
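As a quick sanity check on that digit count, here's a minimal sketch that computes the number of decimal digits of a Mersenne number 2^p - 1 without building the number itself. The function name `mersenne_digit_count` is made up for this illustration; the only inputs taken from the post are the exponent 136279841 and the quoted digit count.

```python
import math


def mersenne_digit_count(p: int) -> int:
    """Return the number of decimal digits of 2**p - 1, for p >= 1."""
    # 2**p is never a power of 10, so subtracting 1 cannot drop a digit:
    # 2**p - 1 has the same digit count as 2**p, which is floor(p*log10(2)) + 1.
    return math.floor(p * math.log10(2)) + 1


# Exponent of the prime described above.
print(mersenne_digit_count(136279841))
```

This relies on floating point `log10`, which is fine here because the product is not close to an integer boundary; for an exact check on small exponents you can compare against `len(str(2**p - 1))`.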

2024

[… OpenAI’s o1] could work its way to a correct (and well-written) solution if provided a lot of hints and prodding, but did not generate the key conceptual ideas on its own, and did make some non-trivial mistakes. The experience seemed roughly on par with trying to advise a mediocre, but not completely incompetent, graduate student. However, this was an improvement over previous models, whose capability was closer to an actually incompetent graduate student.

An animated introduction to Fourier Series (via) Outstanding essay and collection of animated explanations (created using p5.js) by Andrei Ciobanu explaining Fourier transforms, starting with circles, pi, radians and building up from there.

I found Fourier stuff only really clicked for me when it was accompanied by clear animated visuals, and these are a beautiful example of those done really well.
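For a non-animated taste of the same idea, here's a small sketch (not taken from the linked essay) that sums the first few sine terms of the standard Fourier series for a square wave, (4/π) · Σ sin(kx)/k over odd k. The function name `square_wave_partial_sum` is an assumption made for this example.

```python
import math


def square_wave_partial_sum(x: float, terms: int) -> float:
    """Sum the first `terms` odd harmonics of the square wave Fourier series."""
    total = 0.0
    for n in range(terms):
        k = 2 * n + 1  # only odd harmonics 1, 3, 5, ... contribute
        total += math.sin(k * x) / k
    return 4 / math.pi * total


# At x = pi/2 the square wave equals 1; more terms get closer to it.
for terms in (1, 10, 1000):
    print(terms, square_wave_partial_sum(math.pi / 2, terms))
```

Each extra term is one more circle in the animated version: a sine wave with a higher frequency and a smaller amplitude.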

2023

Google DeepMind used a large language model to solve an unsolvable math problem. I’d been wondering how long it would be before we saw this happen: a genuine new scientific discovery found with the aid of a Large Language Model.

DeepMind found a solution to the previously open “cap set” problem using Codey, a fine-tuned variant of PaLM 2 specializing in code. They used it to generate Python code and found a solution after “a couple of million suggestions and a few dozen repetitions of the overall process”.
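To make the problem a little more concrete: a cap set is a collection of points in an n-dimensional grid over the integers mod 3 where no three distinct points lie on a line, which is the same as saying no three distinct points sum to zero mod 3. The sketch below only checks that property for a candidate set. It is not DeepMind's code or search method, and the name `is_cap_set` is invented for this illustration.

```python
from itertools import combinations


def is_cap_set(vectors) -> bool:
    """Check that no three distinct points (coordinates in 0, 1, 2) sum to 0 mod 3."""
    points = set(map(tuple, vectors))
    for a, b in combinations(points, 2):
        # For distinct a and b, the unique third point on their line is -(a + b) mod 3,
        # and it is always different from both a and b.
        c = tuple((-(x + y)) % 3 for x, y in zip(a, b))
        if c in points:
            return False
    return True


print(is_cap_set([(0, 0), (0, 1), (1, 0), (1, 1)]))  # a cap set in dimension 2
print(is_cap_set([(0, 0), (0, 1), (0, 2)]))          # three points on one line
```

Checking a candidate is cheap; the hard part is finding large candidates, since the number of possible sets grows enormously with the dimension.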

2019

An Interactive Introduction to Fourier Transforms (via) I love interactive explorable explanations and this is the best I’ve seen in a while: Jez Swanson breaks down exactly what a Fourier transform does, first by letting you interactively draw and deconstruct wave patterns and then by showing epicycles and explaining JPEG compression. All with not a formula in sight!

2009

Mobius Sliced Linked Bagel. “It is much more fun to put cream cheese on these bagels than on an ordinary bagel. In addition to the intellectual stimulation, you get more cream cheese, because there is slightly more surface area.”

2004

Python in Mathematics

Python in the Mathematics Curriculum by Kirby Urner is something of a sprawling masterpiece. It really comes in four parts: the first is a history of computer science in education, the second an appraisal of the impact of open source on education and the world at large, the third a dive into the things that make Python so suitable for enhancing the mathematics curriculum, and the fourth a discussion of how computer science and traditional mathematics are likely to play off against each other in the field of high school education.

[... 319 words]

2003

Python for teaching mathematics

Kirby Urner provides some great examples of how Python can be used as an aid to understanding mathematics on the marketing-python mailing list. I particularly liked this demonstration of Pascal’s triangle using Python generators:

[... 139 words]
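The original demonstration is truncated here, so the following is not Kirby Urner's code. It is a minimal sketch of the same idea under that caveat: a Python generator that yields the rows of Pascal's triangle one at a time. The name `pascal_rows` is invented for this example.

```python
from itertools import islice


def pascal_rows():
    """Yield successive rows of Pascal's triangle, forever."""
    row = [1]
    while True:
        yield row
        # Each new entry is the sum of the two entries above it; padding the
        # previous row with a zero on each side handles the edges.
        row = [left + right for left, right in zip([0] + row, row + [0])]


# A generator is lazy, so take only as many rows as needed.
for row in islice(pascal_rows(), 5):
    print(row)
```

Because the generator never terminates on its own, `islice` (or a `break`) decides how much of the triangle gets computed.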